Over the past year or so, the Azure upstream open source team has been investing heavily in making serverless Kubernetes a reality. We firmly believe that the Kubernetes operational model can be simplified by removing the burden of managing VMs and by making containers first-class compute runtimes on the cloud. From the beginning of this endeavor, we focused our energy on an open source project called Virtual Kubelet. Today, we’re taking Kubernetes autoscaling a step further by introducing a new project called Osiris, which enables scale-to-zero workloads on Kubernetes.

We’ve also noticed a stronger push from the community to answer how Kubernetes workloads can be audited and have policy enforced at resource creation. As a result, we’re introducing the Kubernetes Policy Controller, which allows you to enforce custom semantic rules on objects during create, update, and delete operations without recompiling or reconfiguring the Kubernetes API server.

Read on to learn more about both new projects.

Achieving scale-to-zero workloads on Kubernetes

Virtual Kubelet has made it possible to dramatically change the autoscaling properties of a Kubernetes cluster. Depending on the provider plugin, workloads can be scaled using Kubernetes-native methods, like the Horizontal Pod Autoscaler, without having to provision additional VM-based nodes or even use a cluster-autoscaler. With Virtual Kubelet-enabled autoscaling, we saw an opportunity to further optimize workload availability and cost by enabling scale-to-zero workloads.

Introducing Osiris, scale-to-zero infrastructure for Kubernetes

Today, we’re excited to announce a project called Osiris, which helps users scale their workloads to zero. Osiris enables greater resource efficiency within a Kubernetes cluster by allowing idling workloads to automatically scale to zero and allowing scaled-to-zero workloads to be automatically reactivated on demand by inbound requests.

How it works

For Osiris-enabled deployments, Osiris automatically instruments application pods. When Osiris determines that a deployment’s pods are idle, Osiris scales the deployment to zero replicas.

Once an Osiris-enabled deployment is scaled to zero, Osiris injects service endpoints that wait for that service to receive traffic. When traffic arrives for that service, Osiris scales the deployment back up to the minimum number of replicas and forwards the request.
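
The idle and reactivation behavior described above can be sketched in a few lines of Python. This is a simplified illustration, not Osiris's actual implementation: the `Deployment` class, the `idle_after_seconds` threshold, and both function names are hypothetical, and timestamps are passed in explicitly so the logic is easy to follow.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    """Hypothetical stand-in for a deployment's scaling state."""
    name: str
    min_replicas: int = 1
    replicas: int = 1
    last_request_at: float = 0.0  # seconds, on any monotonic clock


def scale_down_if_idle(dep, idle_after_seconds, now):
    """Scale to zero once no request has been seen for the idle window."""
    if dep.replicas > 0 and now - dep.last_request_at >= idle_after_seconds:
        dep.replicas = 0
    return dep.replicas


def handle_request(dep, now):
    """Reactivate a scaled-to-zero deployment, then forward the request."""
    if dep.replicas == 0:
        # Only restore the minimum; further scaling is someone else's job.
        dep.replicas = dep.min_replicas
    dep.last_request_at = now
    return f"forwarded to {dep.name} ({dep.replicas} replica(s))"
```

In this sketch, a deployment that has been quiet for the whole idle window drops to zero replicas, and the next inbound request brings it back to `min_replicas` before being forwarded.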

How does this affect autoscaling?

Osiris can be used to realize the full potential of Virtual Kubelet-powered workloads by providing a lightweight infrastructure that enables workloads to scale to zero. When coupled with micro-billed container platforms, this means that you only pay for your services when they have traffic to process.

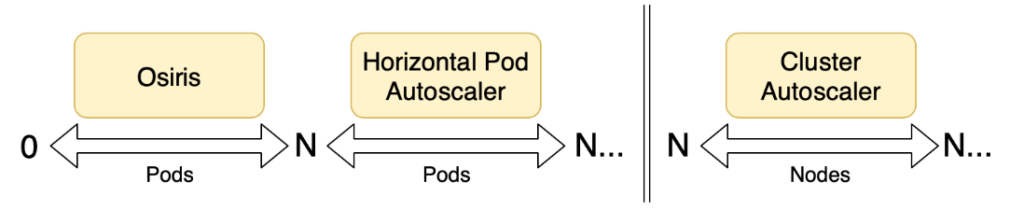

Osiris is designed to work alongside the Kubernetes Horizontal Pod Autoscaler (HPA), not to replace it. Osiris simply scales your deployment down to zero and back up to the minimum number of replicas. All other scaling decisions may be delegated to an HPA, if desired.
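
One way to picture this division of responsibilities is as a single decision function. The sketch below is illustrative only: `next_replicas` and its parameters are hypothetical names, and the precedence it encodes (idleness wins over the autoscaler's suggestion) is an assumption made for the example rather than documented Osiris behavior.

```python
def next_replicas(current, min_replicas, idle, has_traffic, hpa_desired=None):
    """Return the replica count after one reconciliation pass.

    The scale-to-zero component only moves between 0 and min_replicas.
    Any other change comes from the autoscaler's suggestion, if one exists.
    """
    if current == 0:
        # Scaled to zero: wake up only when traffic arrives.
        return min_replicas if has_traffic else 0
    if idle:
        # Assumption: an idle deployment goes to zero regardless of the HPA.
        return 0
    if hpa_desired is not None:
        # The autoscaler decides, but never below the configured minimum.
        return max(min_replicas, hpa_desired)
    return current
```

For example, `next_replicas(0, 2, idle=False, has_traffic=True)` returns the minimum of 2, while a busy deployment with an autoscaler suggestion of 6 is left to scale to 6.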

How do I get connected?

Looking to learn more about Osiris? Please visit the Osiris GitHub repository to learn how to set up Osiris on your cluster today. We are eager to hear your opinions on the concept of scaling to zero in Kubernetes.

Answering Kubernetes workload compliance

Throughout our journey enabling users to move their workloads to Kubernetes, we’ve frequently been asked, “How do we make sure Kubernetes resources conform to our internal policies and procedures?” Every organization has its rules, whether that each resource must be labelled a specific way or that images may come only from specific container repositories. Some of these rules or policies are essential to meet governance or legal requirements, and they may be based on lessons learned from past experience.

These policies need to be enforced in an automated fashion, at create time, to ensure compliance. Policy-enabled resources are more adaptable, which makes the organization more agile and is essential for long-term success. Because automated policies are not prone to human error, violations and conflicts are discovered consistently.

Introducing Kubernetes Policy Controller

Kubernetes Policy Controller allows enforcement of custom semantic rules on objects during create, update, and delete operations without recompiling or reconfiguring the Kubernetes API server. The controller is backed by the Open Policy Agent (OPA), which is a lightweight, general-purpose policy engine for cloud-native environments.

How it works

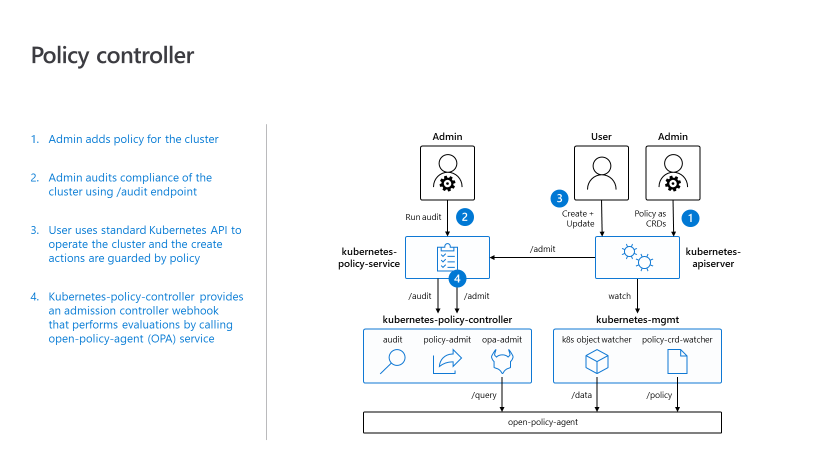

The Kubernetes Policy Controller acts as both a mutating and a validating webhook. The admission controller calls it for matching Kubernetes API server requests, allowing cluster operators to easily enable and enforce policies for their clusters. The Kubernetes Policy Controller has two modes of operation:

- Validation: “all resources R in namespace N are tagged with annotation A”

- Mutation: “before a resource R in namespace N is created tag it with tag T”
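
To make the two modes concrete, here is a minimal Python sketch of how an admission webhook could answer each kind of request. It is not the Kubernetes Policy Controller's implementation, which delegates decisions to OPA; the function names and the choice to model a "tag" as an annotation are assumptions for illustration. The response shape (`uid`, `allowed`, `status`, `patchType`, and a base64-encoded JSON Patch) follows the Kubernetes `AdmissionReview` API, and the sketch assumes the request carries an object with `metadata`, as on create and update.

```python
import base64
import json


def _respond(review, response):
    """Wrap a response body in an AdmissionReview envelope."""
    return {"apiVersion": review["apiVersion"], "kind": "AdmissionReview",
            "response": response}


def validate(review, required_annotation):
    """Validation: reject objects that lack the required annotation."""
    request = review["request"]
    annotations = request["object"].get("metadata", {}).get("annotations") or {}
    response = {"uid": request["uid"],
                "allowed": required_annotation in annotations}
    if not response["allowed"]:
        response["status"] = {
            "message": f"missing annotation {required_annotation!r}"}
    return _respond(review, response)


def mutate(review, key, value):
    """Mutation: admit the object and add an annotation via JSON Patch."""
    request = review["request"]
    metadata = request["object"].get("metadata", {})
    if metadata.get("annotations"):
        # JSON Pointer escapes "~" as "~0" and "/" as "~1" in path segments.
        escaped = key.replace("~", "~0").replace("/", "~1")
        patch = [{"op": "add", "path": f"/metadata/annotations/{escaped}",
                  "value": value}]
    else:
        # No annotations map yet, so create it with the single entry.
        patch = [{"op": "add", "path": "/metadata/annotations",
                  "value": {key: value}}]
    encoded = base64.b64encode(json.dumps(patch).encode()).decode()
    return _respond(review, {"uid": request["uid"], "allowed": True,
                             "patchType": "JSONPatch", "patch": encoded})
```

A validating call either allows the request or rejects it with a message, while a mutating call allows it and returns a patch that the API server applies before the object is persisted.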

These compliance policies are enforced during Kubernetes create, update, and delete operations. Kubernetes compliance is otherwise enforced at runtime via tools such as network policy and pod security policy. The Kubernetes Policy Controller extends compliance enforcement to the “create” event rather than the “run” event. The Kubernetes Policy Controller uses Open Policy Agent (OPA), a policy engine for cloud-native environments that is hosted by the CNCF as a sandbox-level project.

We are taking steps to make sure that your enterprise-level deployments are secure and can be audited at a moment’s notice. You can try out the controller here.

How do I get connected?

We are on a journey to make policy enforcement on Kubernetes clusters simple and reliable. Try out the Kubernetes Policy Controller, contribute to writing policies, and share your scenarios and ideas! To be sure, there is a lot more to do here, and we look forward to working together to get it done!

Hope to see you on GitHub!