Scikit-learn is one of the most useful libraries for general machine learning in Python. To minimize the cost of deployment and avoid discrepancies, deploying scikit-learn models to production usually leverages Docker containers and pickle, the object serialization module of the Python standard library. Docker is a good way to create consistent environments and pickle saves and restores models with ease.

However, there are some limitations when going this route:

- Users may not be able to load an old model saved with pickle when upgrading the version of scikit-learn. This limitation requires putting in place additional infrastructure to be able to retrain the model from a snapshot of the original training data, using the new version of Scikit-learn and its dependencies.

- Scikit-learn is not optimized for performing predictions for one observation at a time, a common situation in web or mobile application scenarios. Like other machine learning frameworks, it is mainly designed for batch predictions. While the individual prediction latency of Scikit-learn estimators could be improved in the future, the Scikit-learn developers acknowledge that it wasn’t always much of a priority in the past.

- Some deployment targets (e.g., mobile or embedded devices) do not support Docker or Python or impose a specific runtime environment, such as .NET or the Java Virtual Machine.

- Scikit-learn and its dependencies (Python, numpy scipy) impose a large memory and storage overhead: at least 200 MB in memory usage, before loading the trained model and even more on the disk. While this is generally not a problem at model training time, this can be a blocker when deploying the prediction function of a single trained model to the target platform.

With framework interoperability and backward compatibility, the resource-efficient and high-performance ONNX Runtime can help address these limitations. This blog post introduces how to operationalize scikit-learn with ONNX, sklearn-onnx, and ONNX Runtime.

ONNX Runtime

ONNX (Open Neural Network Exchange) is an open standard format for representing the prediction function of trained machine learning models. Models trained from various training frameworks can be exported to ONNX. Sklearn-onnx is the dedicated conversion tool for converting Scikit-learn models to ONNX.

ONNX Runtime is a high-performance inference engine for both traditional machine learning (ML) and deep neural network (DNN) models. ONNX Runtime was open sourced by Microsoft in 2018. It is compatible with various popular frameworks, such as scikit-learn, Keras, TensorFlow, PyTorch, and others. ONNX Runtime can perform inference for any prediction function converted to the ONNX format.

ONNX Runtime is backward compatible with all the operators in the ONNX specification. Newer versions of ONNX Runtime support all models that worked with the prior version. By offering APIs covering most common languages including C, C++, C#, Python, Java, and JavaScript, ONNX Runtime can be easily plugged into an existing serving stack. With cross-platform support for Linux, Windows, Mac, iOS, and Android, you can run your models with ONNX Runtime across different operating systems with minimum effort, improving engineering efficiency to innovate faster.

Scikit-learn to ONNX conversion

If you are interested in performing high-performance inference with ONNX Runtime for a given scikit-learn model, here are the steps:

- Train a model with or load a pre-trained model from Scikit-learn.

- Convert the model from Scikit-learn to ONNX format using the sklearn-onnx tool.

- Run the converted model with ONNX Runtime on the target platform of your choice.

Here is a tutorial to convert an end-to-end flow: Train and deploy a scikit-learn pipeline.

A pipeline can be exported to ONNX only when every step can. Most of the numerical models are now supported in sklearn-onnx. There are also some restrictions:

- Sparse data is not supported yet. Therefore, it is still difficult to convert models handling text features where sparse vectors play an important role.

- ONNX still offers limited options to perform iterative numerical optimization at inference time. As a result, sklearn-onnx does not support models such as NMF or LDA yet.

In addition to improving the model coverage, sklearn-onnx also extends its API to allow users to register any additional converter or even overwrite existing ones as custom transformers. The tutorial named Implement a new converter demonstrates how to convert a pipeline that includes an unsupported model class using a custom converter.

A prediction function may be converted in a way slightly different from the original scikit-learn implementation. It is therefore recommended to always compare the approximate equality of the predictions on a validation set prior to deploying the exported ONNX model. In particular, ONNX Runtime development prioritizes float over double, which is driven by performance for deep learning models. That’s why sklearn-onnx also uses single-precision floating-point values by default. However, in some cases, double precision is required to avoid significant discrepancies, specifically when the prediction computation involves the inverse of a matrix as in GaussianProcessRegressor or a discontinuous function as in Trees. An example named Issues when switching to float shows ways to solve the discrepancies introduced by using limited precision. The ONNX specification will be extended to resolve these discrepancies in the future.

Exceptional speedup on Scikit-learn models with ONNX Runtime

ONNX Runtime includes CPU state-of-the-art implementation for standard machine model predictions. In addition, with pure C++ implementations for both data verification and computation, ONNX Runtime only needs to acquire the GIL to return the output predictions (when called from Python) while scikit-learn needs it for every intermediate result. Therefore, ONNX Runtime is usually significantly faster than scikit-learn. The speed improvement depends on the batch size and the model class and hyper-parameters.

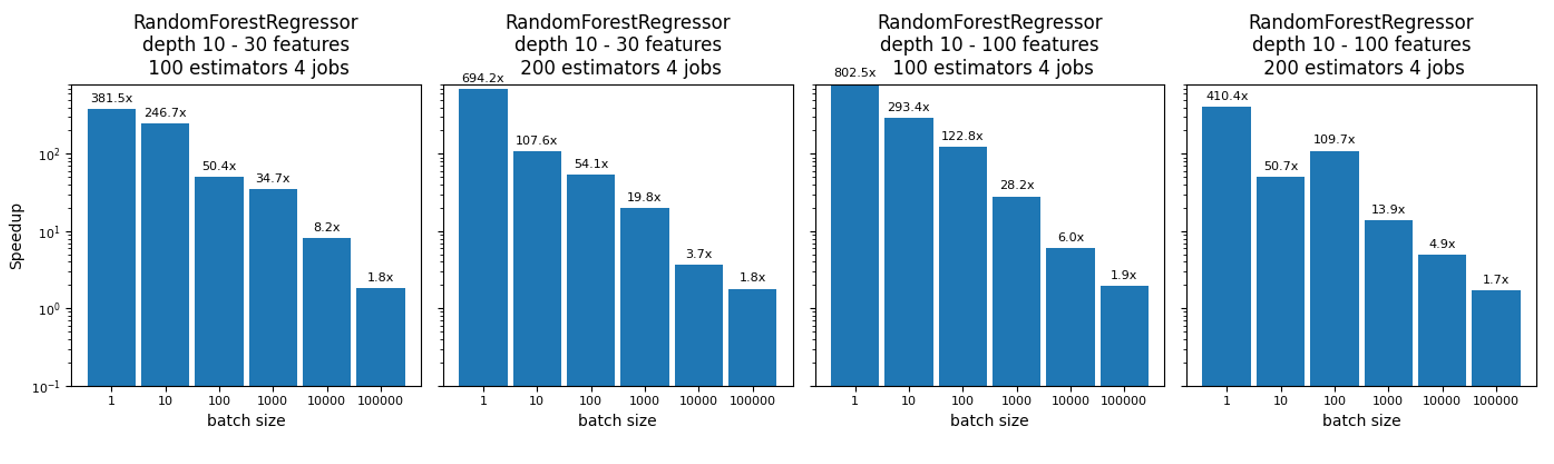

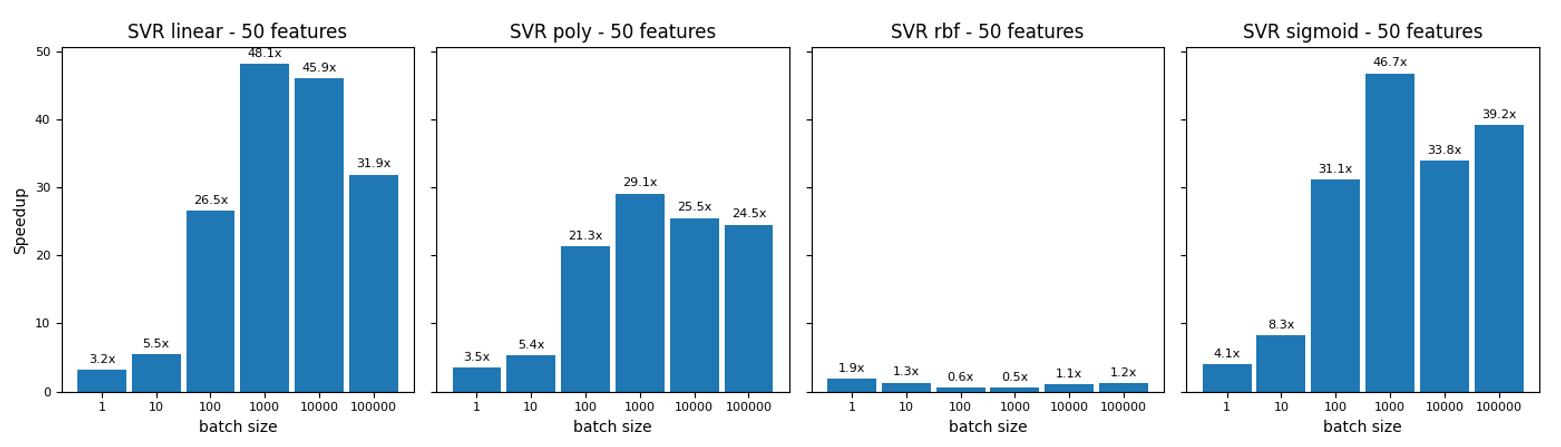

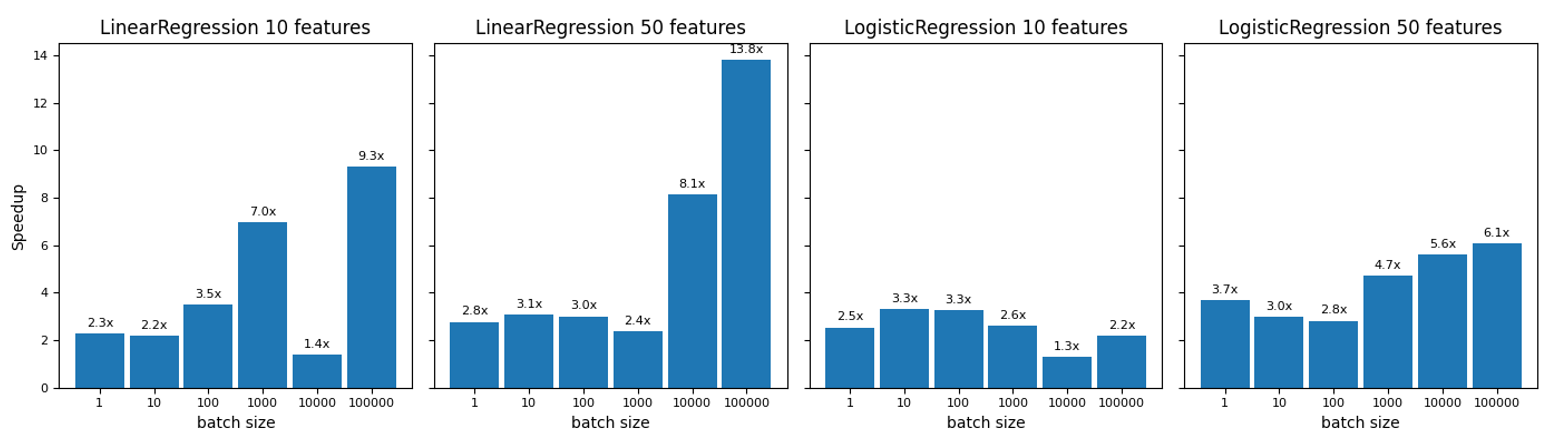

Let us consider a few popular scikit-learn models as examples. Below are performance benchmarks between scikit-learn 23.2 and ONNX Runtime 1.6 on Intel i7-8650U at 1.90GHz with eight logical cores. The y-axis is the model speedup with ONNX Runtime over the prediction speed of the scikit-learn model. The x-axis represents the number of observations for which we compute predictions in a single call (batch size). Different columns highlight the impact of changing some parameter values and the number of features used to train a given model.

- Random Forest: ONNX Runtime runs much faster than scikit-learn with a batch size of one. We saw smaller but still noticeable performance gains for large batch sizes.

- SVM Regression: ONNX Runtime outperforms scikit-learn with 3 function kernels, the small slow down observed for the RBF kernel is under investigation and will be improved in feature releases.

- Linear Regression and Logistic Regression: ONNX Runtime is consistently better than scikit-learn across all the cases we tested.

The performance of RandomForestRegressor has been improved by a factor of five in the latest release of ONNX Runtime (1.6). The performance difference between ONNX Runtime and scikit-learn is constantly monitored. The fastest library helps to find more efficient implementation strategies for the slowest one. The comparison is more relevant on a large batch size as the intermediate steps scikit-learn calls to verify the inputs become insignificant compared to the overall computation time. Note that the poor speed of scikit-learn with a small batch size was reported on the scikit-learn issue tracker and will hopefully be improved in future releases of scikit-learn.

Looking forward

The list of supported models is still growing. This has led to some interesting discussions with core developers of scikit-learn. We will continue optimizing the performance in ONNX Runtime given this is a hard challenge and scikit-learn also continues to improve. This story of scikit-learn and ONNX began two years ago when Microsoft became a sponsor of the Scikit-learn consortium @ Inria Foundation and it is still going on.

Questions or feedback? Please let us know in the comments below.