In our previous blog post, we described the progress we have made on the eBPF for Windows project. A key goal for us has been to meet developers where they are, so enabling eBPF programs written for Linux to run on top of the eBPF for Windows platform is very important to us. In this update, we share what we learned and observed while working with an application that was fundamentally written for Linux.

What better way to demonstrate this than with a relevant, real-world use case? With help from Cilium developers, we have been working to get the Cilium Layer-4 Load Balancer (L4LB) eBPF program running on eBPF for Windows.

Leading up to this, we have focused on building out the basic infrastructure for various map types, helpers, and hooks.

Identifying the cross-platform functionality

The Cilium load balancer is very rich in functionality, so for this work we identified the subset of it that provides L4 load balancing.

Thanks to the developers on the Cilium project, the L4LB code is open source. We started with a deep dive into how the application is structured: how functionality is divided between the user-mode application and the eBPF program loaded in the kernel, which eBPF hooks and helpers are used, and for what purposes.

User-mode application

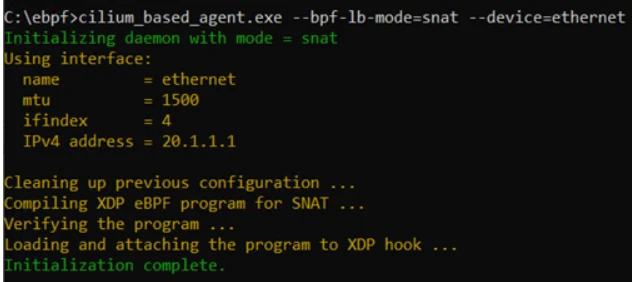

Naturally, the application was developed on Linux, and we quickly found that the user-mode application uses Linux-specific libraries such as netlink. As a result, we decided to write a user-mode app, inspired by the Cilium agent, that uses the Windows SDK for some of the networking APIs and the eBPF for Windows platform for driving the eBPF programs.

The application creates the same types of maps that the Cilium agent does and loads the eBPF program that implements the load balancing functionality.

Hooks and helpers

Next, we listed the hooks and helpers used by the L4LB functionality. At a high level, Cilium has a standalone load balancer that uses eXpress Data Path (XDP) hooks as well as socket and Traffic Control (TC) subsystem hooks. For the L4LB, the XDP hook is particularly interesting because it executes BPF programs directly inside the network driver's receive path, as early as possible, in order to process a high volume of inbound packets. The socket and TC hooks are used for optional health probing of backends. For the purposes of the demo, we decided to focus on the datapath and started building out the hooks, helpers, and maps it needs.

We had already implemented an XDP hook based on the Windows Filtering Platform (WFP) for handling layer 2 traffic, and we enhanced it for this work.

The table below shows the hooks and helpers we needed to add for this purpose; entries marked with an asterisk (*) turned out to be either optional or something we worked around. For example, we knew the bpf_fib_lookup() helper would provide the next-hop information needed for sending packets. For the demo, we instead used existing APIs from the Windows SDK to get the layer 2 data and populated it in a map for the eBPF program to use.

| Category | Linux APIs |
| --- | --- |
| Generic Helpers | bpf_map_lookup/update/delete_elem |
| | bpf_tail_call |
| | bpf_get_prandom_u32 |
| | bpf_get_smp_processor_id |
| | bpf_csum_diff |
| | bpf_fib_lookup* |
| | bpf_ktime_get_ns |
| XDP Helpers | bpf_xdp_adjust_meta* |
| | bpf_xdp_adjust_tail* |
| XDP action | XDP_TX (hairpin) |
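As a rough illustration of the bpf_fib_lookup() workaround, the following Python sketch models a map that the user-mode agent fills with next-hop (layer 2) data, plus the Ethernet header rewrite a datapath would perform before returning XDP_TX. The map layout, the `NEIGHBOR_MAP`, `add_neighbor`, and `rewrite_l2` names, and all addresses are hypothetical; this is not the demo's actual code, and a real eBPF program would edit the packet buffer in place rather than build a new one.

```python
import ipaddress
from typing import Dict, NamedTuple, Optional

class NextHop(NamedTuple):
    ifindex: int
    src_mac: bytes  # MAC of the load balancer's egress interface
    dst_mac: bytes  # MAC of the next hop (for example, a backend VM)

# Hypothetical stand-in for the map the user-mode agent populates using
# Windows SDK networking APIs instead of calling bpf_fib_lookup().
NEIGHBOR_MAP: Dict[int, NextHop] = {}

def _mac(text: str) -> bytes:
    return bytes.fromhex(text.replace(":", ""))

def add_neighbor(ip: str, ifindex: int, src_mac: str, dst_mac: str) -> None:
    """User-mode side: key the entry by the next hop's IPv4 address."""
    key = int(ipaddress.IPv4Address(ip))
    NEIGHBOR_MAP[key] = NextHop(ifindex, _mac(src_mac), _mac(dst_mac))

def rewrite_l2(frame: bytes, next_hop_ip: str) -> Optional[bytes]:
    """Datapath side: return the frame with its Ethernet addresses rewritten
    for the next hop, or None if there is no entry (or the frame is too short)."""
    hop = NEIGHBOR_MAP.get(int(ipaddress.IPv4Address(next_hop_ip)))
    if hop is None or len(frame) < 14:
        return None
    # Ethernet header: destination MAC (6 bytes), source MAC (6 bytes),
    # EtherType (2 bytes); everything from the EtherType on is kept.
    return hop.dst_mac + hop.src_mac + frame[12:]
```

For example, after `add_neighbor("10.0.0.11", 2, "00:15:5d:00:00:01", "00:15:5d:00:00:0b")`, a frame destined for that hypothetical backend gets `00:15:5d:00:00:0b` as its destination MAC and the load balancer's interface MAC as its source.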

Maps

We enhanced support for the following additional map types:

| Category | Linux APIs |
| --- | --- |
| Map types | bpf_map_type_hash |
| | bpf_map_type_percpu_array |
| | bpf_map_type_percpu_hash |
| | bpf_map_type_prog_array |
| | bpf_map_type_hash_of_maps |
| | bpf_map_type_lru_hash |
| | bpf_map_type_lpm_trie |
| | bpf_map_type_perf_event_array* |
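To show why an LRU hash suits state such as a connection tracker, here is a minimal Python model of LRU-hash behavior: a fixed-capacity map that evicts the least recently used entry instead of failing an insert when it is full. The `LruHashMap` class and the 5-tuple key are hypothetical, and real kernel LRU maps may only approximate strict LRU ordering.

```python
from collections import OrderedDict
from typing import Generic, Hashable, Optional, TypeVar

K = TypeVar("K", bound=Hashable)
V = TypeVar("V")

class LruHashMap(Generic[K, V]):
    """Fixed-capacity map that evicts the least recently used key when full."""

    def __init__(self, max_entries: int) -> None:
        if max_entries <= 0:
            raise ValueError("max_entries must be positive")
        self.max_entries = max_entries
        self._data: "OrderedDict[K, V]" = OrderedDict()

    def __len__(self) -> int:
        return len(self._data)

    def lookup(self, key: K) -> Optional[V]:
        if key not in self._data:
            return None
        self._data.move_to_end(key)  # a hit makes the entry most recently used
        return self._data[key]

    def update(self, key: K, value: V) -> None:
        if key in self._data:
            self._data.move_to_end(key)
        elif len(self._data) >= self.max_entries:
            self._data.popitem(last=False)  # evict the least recently used entry
        self._data[key] = value

    def delete(self, key: K) -> bool:
        if key in self._data:
            del self._data[key]
            return True
        return False
```

A connection tracker built this way could key entries by (source IP, destination IP, source port, destination port, protocol) and store the selected backend, so that idle flows age out under pressure rather than blocking new ones.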

Verifier functionality enhancements

Just as we were making progress, we started running into verification failures when running the PREVAIL verifier on the Cilium L4LB code. Digging deeper, we noticed that parts of the Cilium L4LB program embedded Linux-specific assembly rather than being compiled from C, apparently as workarounds for artifacts of the Linux kernel verifier. That assembly failed PREVAIL verification while the original C code passed, so we went back to using C instead of assembly for those code paths.

We then found two common cases where the PREVAIL verifier needed additional functionality to handle such real-world programs, so we created patches for PREVAIL and contributed them upstream.

Getting it all to work together

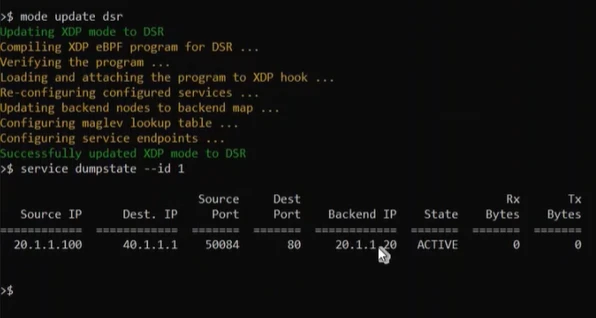

Finally, we put all the pieces together to run both the Direct Server Return (DSR) and Source NAT (SNAT) functionality of the load balancer.

After commenting out functionality we identified as optional (as far as a demo is concerned) and handling the aforementioned cases of assembly instructions, we were able to get around 96 percent of the eBPF code that was written for Linux to run on top of the eBPF for Windows platform. This includes eBPF code written for Maglev hashing, NAT engine, connection tracker, packet handling code, and so on. We think this is very encouraging and exciting because it demonstrates the cross-platform value of eBPF.

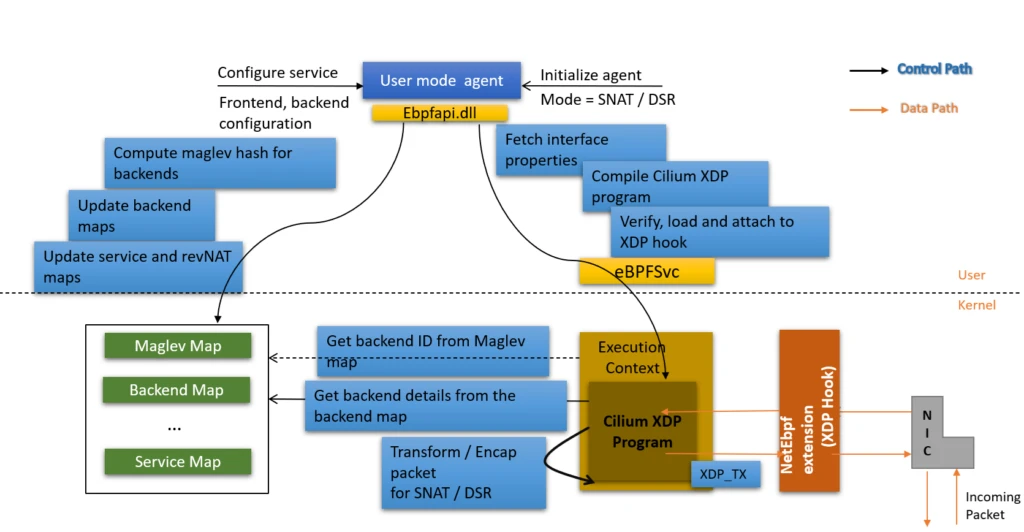

Software architecture

Figure 1 illustrates the software architecture of the demo load balancer. The code consists of a user-mode agent inspired by the Cilium L4LB’s agent. The agent reads several input properties, such as interface details, and accepts the desired service details. For the load balancer itself, we ended up supporting both DSR and SNAT, and the agent accepts the choice as a service mode. In addition, the agent receives the virtual IP (VIP) address and the backend Direct IP (DIP) addresses. The agent computes the Maglev hash for the backends and then sets up the maps required by the BPF program, such as the Maglev map, the backend map, and the service map, among others. Finally, the agent calls the eBPF APIs, through which the L4LB BPF program is verified and then loaded at the XDP hook.
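The Maglev step the agent performs can be sketched as follows. The sketch follows the table-population algorithm described in Google's Maglev paper: each backend gets a pseudo-random permutation of table slots derived from an `offset` and a `skip`, and backends take turns claiming their next free slot. The FNV-1a hashes, the second seed, the tiny prime table size of 13, the backend addresses, and the function names are assumptions for illustration; Cilium's implementation uses different hash functions and a much larger prime table size, and the demo agent may differ in detail.

```python
from typing import List, Sequence

def fnv1a_32(data: bytes, seed: int = 0x811C9DC5) -> int:
    """32-bit FNV-1a. Swapping the offset basis for another seed gives a
    second, different hash from the same function (an assumption here)."""
    h = seed
    for byte in data:
        h ^= byte
        h = (h * 0x01000193) & 0xFFFFFFFF
    return h

def build_maglev_table(backends: Sequence[str], table_size: int) -> List[int]:
    """Return a lookup table whose entries are indexes into `backends`.
    `table_size` must be prime so every skip value is coprime with it."""
    if not backends or table_size < 2:
        raise ValueError("need at least one backend and a prime table_size")
    n = len(backends)
    offsets, skips = [], []
    for name in backends:
        key = name.encode()
        offsets.append(fnv1a_32(key) % table_size)
        skips.append(fnv1a_32(key, seed=0x9747B28C) % (table_size - 1) + 1)
    next_index = [0] * n       # position reached in each backend's permutation
    table = [-1] * table_size  # -1 marks an empty slot
    filled = 0
    while True:
        for i in range(n):
            # Walk backend i's permutation until it reaches an empty slot.
            slot = (offsets[i] + next_index[i] * skips[i]) % table_size
            while table[slot] >= 0:
                next_index[i] += 1
                slot = (offsets[i] + next_index[i] * skips[i]) % table_size
            table[slot] = i
            next_index[i] += 1
            filled += 1
            if filled == table_size:
                return table

def pick_backend(table: List[int], flow_hash: int) -> int:
    """Datapath side: map a flow's hash (e.g. of its 5-tuple) to a backend."""
    return table[flow_hash % len(table)]
```

With four backends and 13 slots, every round gives each backend one slot, so the first backend ends up with 4 entries and the others with 3. Larger tables make the split nearly even while keeping most entries unchanged when a backend is added or removed, which is why the datapath only needs a single map lookup per new flow.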

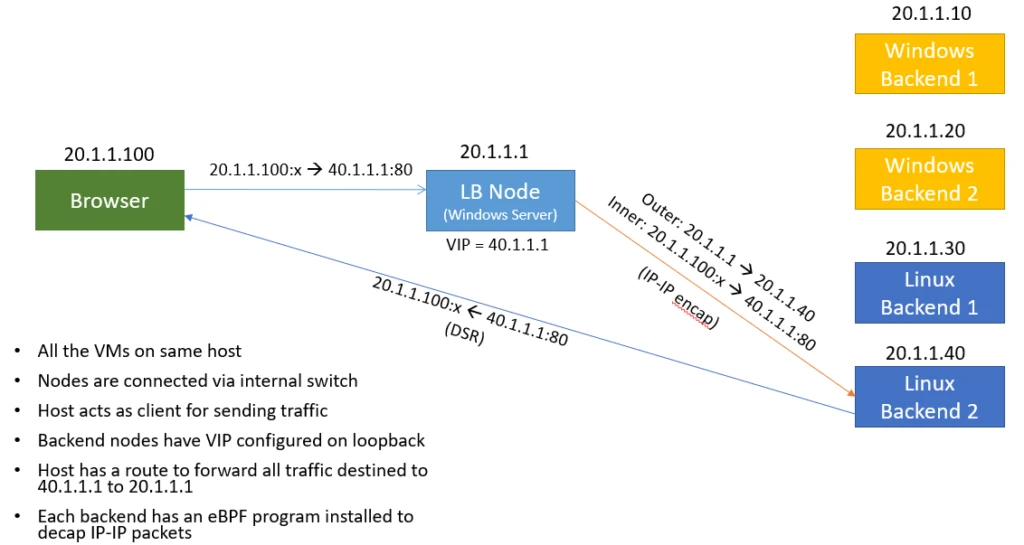

The demo topology consists of five virtual machines (VMs), one load balancer VM and four backend DIP VMs, plus a browser session representing the client. For simplicity, we hosted these VMs on the same server, although this should not matter. The VMs are interconnected using an internal VMSwitch on the server. Figure 3 shows the topology of the setup and the packet path taken in DSR mode.

Demo Topology DSR

The client initiates a web request with source address 20.1.1.100 to the VIP address 40.1.1.1. In SNAT mode, the load balancer translates the IP header in both the inbound and outbound directions, and the connection state is tracked in the connection tracker map.
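Each address translation in SNAT mode also requires updating the IPv4 header checksum (and the TCP/UDP checksum, whose pseudo-header covers the addresses). Helpers such as bpf_csum_diff make it possible to do this incrementally instead of recomputing over the whole packet. The Python sketch below shows the standard one's-complement incremental update from RFC 1624, HC' = ~(~HC + ~m + m'), for rewriting, say, the destination address from the VIP to a backend address, and checks it against a full recomputation. The header field values and the backend address 10.0.0.11 are made up for illustration; this is not the Cilium code.

```python
import ipaddress
import struct

def ones_complement_sum(data: bytes) -> int:
    """16-bit one's-complement sum of `data`, padded to an even length."""
    if len(data) % 2:
        data += b"\x00"
    total = 0
    for (word,) in struct.iter_unpack("!H", data):
        total += word
        total = (total & 0xFFFF) + (total >> 16)  # end-around carry
    return total

def ipv4_header_checksum(header: bytes) -> int:
    """Full checksum over an IPv4 header whose checksum field is zero."""
    return ~ones_complement_sum(header) & 0xFFFF

def incremental_update(old_csum: int, old_bytes: bytes, new_bytes: bytes) -> int:
    """RFC 1624 eqn. 3, HC' = ~(~HC + ~m + m'), applied 16 bits at a time."""
    if len(old_bytes) != len(new_bytes) or len(old_bytes) % 2:
        raise ValueError("old and new fields must be equal, even-length")
    total = ~old_csum & 0xFFFF
    for (old_w,), (new_w,) in zip(struct.iter_unpack("!H", old_bytes),
                                  struct.iter_unpack("!H", new_bytes)):
        total += (~old_w & 0xFFFF) + new_w
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF

def build_ipv4_header(src: str, dst: str, csum: int = 0) -> bytes:
    """Minimal 20-byte IPv4 header for a TCP packet; values are illustrative."""
    return struct.pack("!BBHHHBBH4s4s", 0x45, 0, 40, 0x1234, 0x4000, 64, 6,
                       csum, ipaddress.IPv4Address(src).packed,
                       ipaddress.IPv4Address(dst).packed)
```

The same difference can be folded into the transport checksum, since the changed address words appear in its pseudo-header as well.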

In DSR mode, the load balancer encapsulates the incoming request in an outer IP header and sends it to the selected backend VM. The backend VM must therefore decapsulate the outer IP header before handing the request to the web server. We chose to run Windows on two of the backend VMs and Linux on the other two. The Windows backends used an XDP program to handle the decapsulation, while the Linux backends used a TC-based program.

All the demo code described above is open source and available on GitHub, and anyone can use it to experiment with or extend as they wish.

What next?

We keep the list of open work items in our public GitHub repository. We will continue to be application-centric, with a focus on hooks and helpers for better network observability. Maximizing eBPF source code compatibility will remain an important goal as we build out the platform to help even more applications be deployed on top of eBPF for Windows. In addition, we are actively discussing with the community how to accept signed eBPF programs.

We invite the community to collaborate on all of this work, whether through code, design, or bug reports.

How to collaborate

We welcome all contributions and suggestions and look forward to continued collaboration with the rest of the eBPF developer community on this exciting journey. You can reach us on our Slack channel, through our GitHub discussions, or join us at our weekly public Zoom meetings Mondays at 8:30 AM Pacific Time.