Deep learning models are everywhere without us even realizing it. The number of AI use cases have been increasing exponentially with the rapid development of new algorithms, cheaper compute, and greater access to data. Almost every industry has deep learning applications, from healthcare to education to manufacturing, construction, and beyond. Many developers opt to use popular AI Frameworks like PyTorch, which simplifies the process of analyzing predictions, training models, leveraging data, and refining future results.

PyTorch is a machine learning framework used for applications such as computer vision and natural language processing, originally developed by Meta AI and now a part of the Linux Foundation umbrella, under the name of PyTorch Foundation. PyTorch has a powerful, TorchScript-based implementation that transforms the model from eager to graph mode for deployment scenarios.

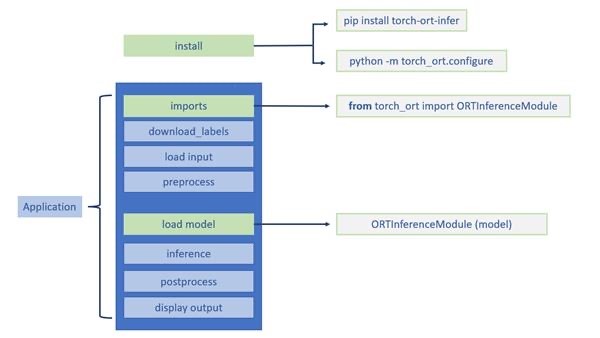

One of the biggest challenges PyTorch developers face in their deep learning projects is model optimization and performance. Oftentimes, the question arises: How can I improve the performance of my PyTorch models? As you might have read in our previous blog, Intel® and Microsoft have joined hands to tackle this problem with OpenVINO™ Integration with Torch-ORT. Initially, Microsoft had released Torch-ORT, which focused on accelerating PyTorch model training using ONNX Runtime. Recently, this capability was extended to accelerate PyTorch model inferencing by using the OpenVINO™ toolkit on Intel® central processing unit (CPU), graphical processing unit (GPU), and video processing unit (VPU) with just two lines of code.

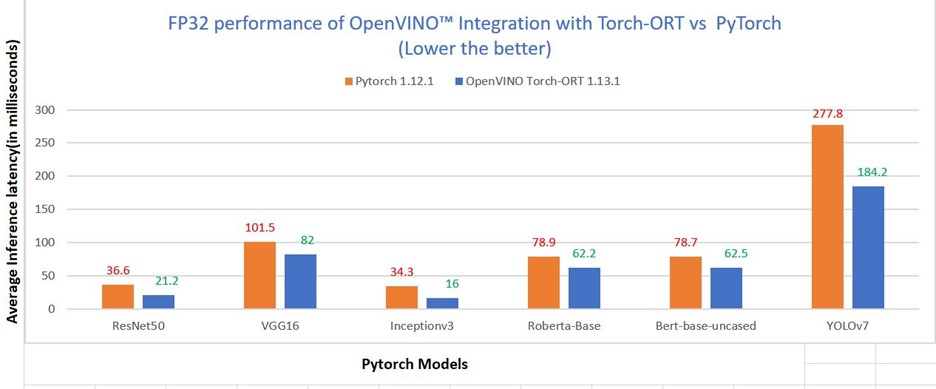

By adding just two lines of code, we achieved 2.15 times faster inference for PyTorch Inception V3 model on an 11th Gen Intel® Core™ i7 processor1. In addition to Inception V3, we also see performance gains for many popular PyTorch models such as ResNet50, Roberta-Base, and more. Currently, OpenVINO™ Integration with Torch-ORT supports over 120 PyTorch models from popular model zoo’s, like Torchvision and Hugging Face.

Features

OpenVINO™ Integration with Torch-ORT introduces the following features:

- Inline conversion of static/dynamic input shape models

- Graph partitioning

- Support for INT8 models

- Dockerfiles/Docker Containers

Inline conversion of static/dynamic input shape models

OpenVINO™ Integration with Torch-ORT performs inferencing of PyTorch models by converting these models to ONNX inline and subsequently performing inference with OpenVINO™ Execution Provider. Currently, both static and dynamic input shape models are supported with OpenVINO™ Integration with Torch-ORT. You also have the ability to save the inline exported ONNX model using the DebugOptions API.

Graph partitioning

OpenVINO™ Integration with Torch-ORT supports many PyTorch models by leveraging the existing graph partitioning feature from ONNX Runtime. With this feature, the input model graph is divided into subgraphs depending on the operators supported by OpenVINO and the OpenVINO-compatible subgraphs run using OpenVINO™ Execution Provider and unsupported operators fall back to MLAS CPU Execution Provider.

Support for INT8 models

OpenVINO™ Integration with Torch-ORT extends the support for lower precision inference through post-training quantization (PTQ) technique. Using PTQ, developers can quantize their PyTorch models with Neural Network Compression Framework (NNCF) and then run inferencing with OpenVINO™ Integration with Torch-ORT. Note: Currently, our INT8 model support is in the early stages, only including ResNet50 and MobileNetv2. We are continuously expanding our INT8 model coverage.

Docker Containers

You can now use OpenVINO™ Integration with Torch-ORT on Mac OS and Windows OS through Docker. Pre-built Docker images are readily available on Docker Hub for your convenience. With a simple docker pull, you will now be able to unleash the key to accelerating performance of PyTorch models. To build the docker image yourself, you can also find dockerfiles readily available on Github.

Customer story

Roboflow—Roboflow empowers ISVs to build their own computer vision applications and enables hundreds of thousands of developers with a rich catalog of services, models, and frameworks to further optimize their AI workloads on a variety of different Intel® hardware. An easy-to-use developer toolkit to accelerate models, properly integrated with AI frameworks, such as OpenVINO™ integration with Torch-ORT provides the best of both worlds—an increase in inference speed as well as the ability to reuse already created AI application code with minimal changes. The Roboflow team has showcased a case study that demonstrates performance gains with OpenVINO™ Integration with Torch-ORT as compared to Native PyTorch for YOLOv7 model on Intel® CPU. The Roboflow team is continuing to actively test OpenVINO™ integration with Torch-ORT with the goal of enabling PyTorch developers in the Roboflow Community.

Try it out

Try out OpenVINO™ Integration with Torch-ORT through a collection of Jupyter Notebooks. Through these sample tutorials, you will see how to install OpenVINO™ Integration with Torch-ORT and accelerate performance for PyTorch models with just two additional lines of code. Stay in the PyTorch framework and leverage OpenVINO™ optimizations—it doesn’t get much easier than this.

Learn more

Here is a list of resources to help you learn more:

Notes:

1Framework configuration: ONNXRuntime 1.13.1

Application configuration: torch_ort_infer 1.13.1, python timeit module for timing inference of models

Input: Classification models: torch.Tensor; NLP models: Masked sentence; OD model: .jpg image

Application Metric: Average Inference latency for 100 iterations calculated after 15 warmup iterations

Platform: Tiger Lake

Number of Nodes: 1 Numa Node

Number of Sockets: 1

CPU or Accelerator: 11th Gen Intel(R) Core(TM) i7-1185G7 @ 3.00GHz

Cores/socket, Threads/socket or EU/socket: 4, 2 Threads/Core

ucode: 0xa4

HT: Enabled

Turbo: Enabled

BIOS Version: TNTGLV57.9026.2020.0916.1340

System DDR Mem Config: slots / cap / run-speed: 2/32 GB/2667 MT/s

Total Memory/Node (DDR+DCPMM): 64GB

Storage – boot: Sabrent Rocket 4.0 500GB – size: 465.8G

OS: Ubuntu 20.04.4 LTS

Kernel: 5.15.0-1010-intel-iotg

Notices and disclaimers:

- Performance varies by use, configuration, and other factors. Learn more at www.Intel.com/PerformanceIndex.

- Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be absolutely secure.

- Your costs and results may vary.

- Intel technologies may require enabled hardware, software, or service activation.

- Intel disclaims all express and implied warranties, including without limitation, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement, as well as any warranty arising from a course of performance, course of dealing, or usage in trade.

- Results have been estimated or simulated.

- © Intel Corporation. Intel, the Intel logo, OpenVINO™, and the OpenVINO™ logo are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.