As the requirements and software surrounding Kubernetes clusters grow along with the required number of clusters, the administrative overhead becomes overwhelming and unsustainable without an appropriate architecture and supportive tooling. This is especially true when running Kubernetes at scale, with hundreds or thousands of clusters. Let’s look at how to ease cluster lifecycle management as you grow from a few clusters to many, and answer the common questions that come up. We’ll look at how to leverage the GitOps pattern to superpower Cluster API, and we’ll recommend an approach for getting started and learning more.

Cluster API (CAPI) enables consistent and repeatable Kubernetes cluster deployments and lifecycle management across more than 30 different infrastructure environments through infrastructure providers such as the Cluster API provider for Azure (CAPZ). CAPI can provision Kubernetes clusters directly on bare-metal, virtual, or cloud infrastructure as a service (IaaS), where the customer operates the control plane (also known as “self-managed”), as well as managed clusters such as Microsoft Azure Kubernetes Service (AKS), Google Kubernetes Engine (GKE), or Amazon Elastic Kubernetes Service (EKS), where the provider manages the control plane.

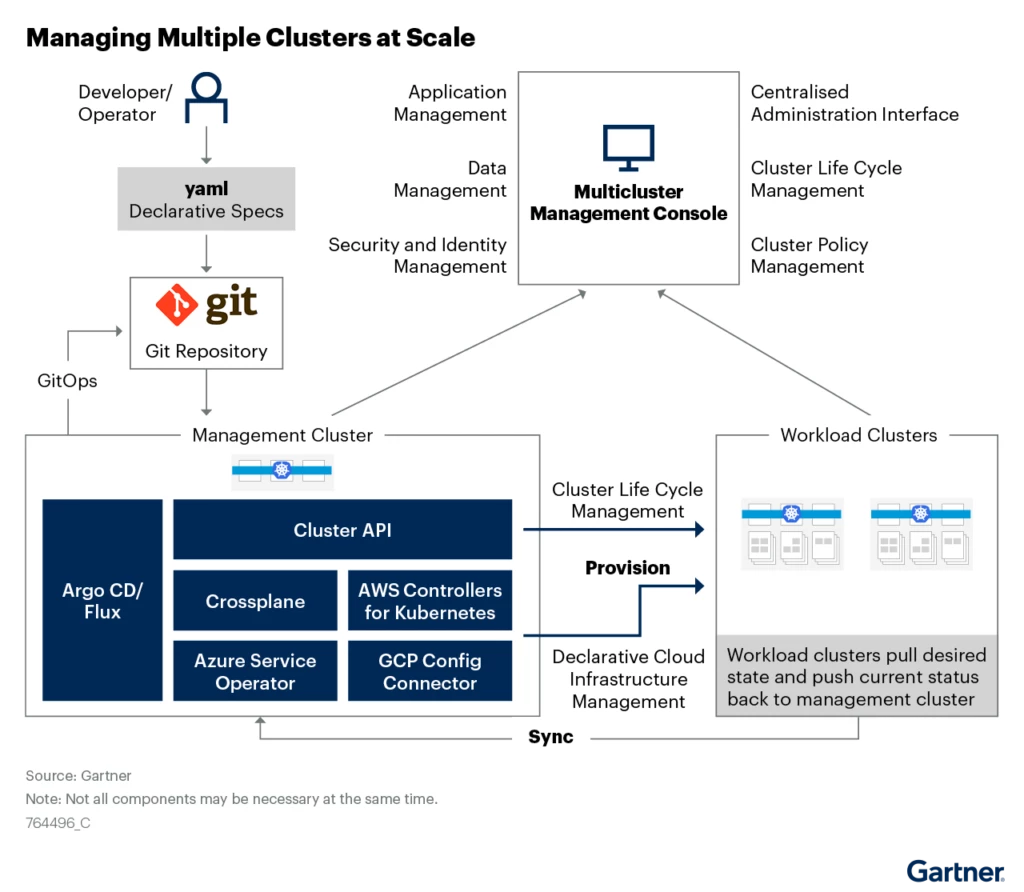

One of the major benefits of CAPI is the ability to provision Kubernetes clusters using Kubernetes itself, through the custom resource definitions (CRDs) installed on a management cluster. The management cluster runs a regular reconciliation loop to ensure that each workload cluster is in the state specified in its CAPI custom resources. Combining CAPI with GitOps tooling such as Flux or Argo CD makes it possible to manage the entire lifecycle of clusters at scale. This pattern can also be seen in the Gartner® report “How to Scale DevOps Workflows in Multicluster Kubernetes Environments.”1
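The reconciliation idea described above can be sketched in a few lines of Python. This is only an illustration of the desired-state comparison, not CAPI’s controller code: `ClusterState`, its fields, and the `reconcile` function are hypothetical names, and a real controller would repeat this comparison continuously against live infrastructure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterState:
    """Hypothetical, heavily simplified view of a workload cluster."""
    kubernetes_version: str
    worker_count: int


def reconcile(desired: ClusterState, observed: ClusterState) -> list[str]:
    """Return the actions needed to move `observed` toward `desired`."""
    actions = []
    if observed.kubernetes_version != desired.kubernetes_version:
        actions.append(f"upgrade to {desired.kubernetes_version}")
    if observed.worker_count < desired.worker_count:
        actions.append(f"add {desired.worker_count - observed.worker_count} worker(s)")
    elif observed.worker_count > desired.worker_count:
        actions.append(f"remove {observed.worker_count - desired.worker_count} worker(s)")
    return actions


# Desired state as it would be declared on the management cluster (assumed values).
desired = ClusterState(kubernetes_version="v1.26.3", worker_count=3)
# Observed state after one worker node has disappeared.
observed = ClusterState(kubernetes_version="v1.26.3", worker_count=2)

# One pass of the loop; a controller would run this repeatedly.
print(reconcile(desired, observed))  # one corrective action
print(reconcile(desired, desired))   # nothing to do when states match
```

Because the comparison is repeated rather than run once, drift is corrected without anyone re-running a provisioning job.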

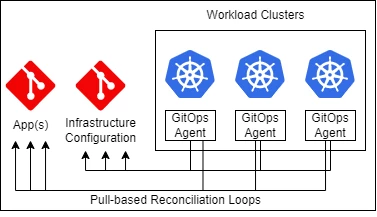

After a small CAPI cluster YAML definition is merged into the synchronized Git repository, a new cluster can be quickly instantiated. Furthermore, the workload cluster can be fully hydrated with all of its dependencies and applications by GitOps agents on the workload cluster that synchronize with one or more other Git repositories (an example is shown in the accompanying diagram).
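To give a sense of how small such a cluster definition can be, here is a hedged sketch that builds the top-level CAPI `Cluster` object as a Python dictionary and prints it as JSON, which Kubernetes accepts alongside YAML. The cluster name, namespace, and pod CIDR are assumed values, and `cluster_manifest` is a hypothetical helper. A working definition also needs the referenced control plane, infrastructure, and machine objects, which are omitted here.

```python
import json


def cluster_manifest(name: str, namespace: str = "default") -> dict:
    """Build a minimal Cluster object that points at its provider objects."""
    return {
        "apiVersion": "cluster.x-k8s.io/v1beta1",
        "kind": "Cluster",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Assumed pod network range, for illustration only.
            "clusterNetwork": {"pods": {"cidrBlocks": ["192.168.0.0/16"]}},
            # Self-managed control plane, defined in a separate object.
            "controlPlaneRef": {
                "apiVersion": "controlplane.cluster.x-k8s.io/v1beta1",
                "kind": "KubeadmControlPlane",
                "name": f"{name}-control-plane",
            },
            # Azure-specific infrastructure, handled by CAPZ.
            "infrastructureRef": {
                "apiVersion": "infrastructure.cluster.x-k8s.io/v1beta1",
                "kind": "AzureCluster",
                "name": name,
            },
        },
    }


# "dev-01" is a hypothetical cluster name.
manifest = cluster_manifest("dev-01")
print(json.dumps(manifest, indent=2))
```

Committing a file like this, plus the objects it references, to the repository watched by the management cluster is what triggers provisioning in the pattern described above.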

Within minutes, any number of new Kubernetes clusters can be added, each with everything it needs to function and each in a reconciliation loop covering both the cluster configuration itself and the workloads running on it.

The other big benefit of this pattern is that it provides a foundation for platform engineering in an organization. It makes it easier to offer self-service development environments and to manage the overall lifecycle of the clusters. The “Multicluster Management Console” shown in the top diagram, and its surrounding capabilities, vary based on the supporting products and tooling ecosystem.

Now that there is a fundamental understanding of how this pattern can help, let’s surface some challenges with the traditional architectural pattern: using infrastructure as code (IaC) tools such as Terraform to provision clusters, and CI/CD pipeline orchestration to push Kubernetes workload applications and infrastructure configurations to the clusters.

The biggest challenge is that IaC combined with CI/CD pipelines without GitOps doesn’t scale. Without GitOps to hydrate the cluster, there is likely a CI/CD pipeline for every cluster configuration and every app, multiplied by the number of clusters. The more clusters are added, the more pipeline management explodes. Additionally, any time clusters are added or removed, the connection to each cluster has to be updated in the pipeline orchestration engine.
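A quick back-of-the-envelope calculation shows how fast this multiplies. The sketch below assumes, as a simplification, exactly one push pipeline per configuration or app per cluster. The counts (3 shared configurations, 5 apps) and the function names are made up for illustration.

```python
def push_pipelines(clusters: int, configs: int, apps: int) -> int:
    """Pipelines needed when each config and app is pushed to each cluster."""
    return clusters * (configs + apps)


def gitops_definitions(configs: int, apps: int) -> int:
    """Shared definitions kept in Git, independent of the cluster count."""
    return configs + apps


CONFIGS, APPS = 3, 5  # assumed numbers, for illustration only

for clusters in (10, 100, 1000):
    print(
        f"{clusters:>4} clusters: "
        f"{push_pipelines(clusters, CONFIGS, APPS):>5} push pipelines vs "
        f"{gitops_definitions(CONFIGS, APPS)} shared GitOps definitions"
    )
```

Under these assumptions the pipeline count grows linearly with the number of clusters (80, 800, 8000), while the number of shared definitions stays at 8.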

In contrast, the GitOps pattern lets shared configurations and app definitions apply to N clusters, commonly through Kustomize or Helm overlay patterns and related features such as templating. The GitOps agent is typically installed on each cluster and has permission to apply the changes found in Git. This keeps management scalable as clusters are added and reduces the need to configure pipelines that push changes to the clusters.
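The overlay idea can be illustrated with a simplified recursive merge: one shared base definition plus a small per-cluster patch. This is a sketch of the concept only, not the merge logic that Kustomize or Helm actually implement. The `apply_overlay` helper, the cluster names, and the settings are all hypothetical.

```python
import copy


def apply_overlay(base: dict, overlay: dict) -> dict:
    """Return a copy of `base` with `overlay` merged in, key by key."""
    merged = copy.deepcopy(base)
    for key, value in overlay.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Merge nested mappings instead of replacing them wholesale.
            merged[key] = apply_overlay(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Shared app definition used by every cluster (assumed values).
base = {
    "replicas": 2,
    "image": "example.com/app:1.0",
    "resources": {"cpu": "250m", "memory": "256Mi"},
}

# Per-cluster patches: only the differences from the base are written down.
overlays = {
    "dev": {},
    "prod": {"replicas": 5, "resources": {"memory": "1Gi"}},
}

rendered = {name: apply_overlay(base, patch) for name, patch in overlays.items()}
for name, settings in rendered.items():
    print(name, settings)
```

Adding another cluster then means adding one small patch (or none), rather than copying the full definition or creating another pipeline.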

Also, Terraform by itself doesn’t contain an ongoing desired-state reconciliation loop, so the configuration is only enforced when the Terraform code (HCL *.tf files) is applied. It is possible to use Terraform with GitOps operators (see Weave tf-controller). The challenge with this approach is the dependency on operator code, which may be a paid feature, to essentially run `terraform plan` and `terraform apply`. A central Kubernetes management cluster, on the other hand, is designed to regularly reconcile the state of the resources under its control.

Now, let’s take a look at some of the most common questions that come up as organizations consider their cluster management needs.

Are CAPI and CAPZ production ready?

CAPI has been adopted by many organizations; it reached 1.0 general availability in October 2021 and recently shipped the 1.4.0 release. CAPZ released 1.8.0 on March 8, 2023, which graduated provisioning of Managed Clusters to GA. Deploying AKS using CAPZ saves cost and management overhead by outsourcing the control plane nodes to the provider and, combined with the pattern described in this article, brings all of these advantages to production scale. It’s important to note that CAPI was originally designed for self-managed clusters, so the equivalent Managed Clusters implementation may or may not be GA depending on the cloud provider.

What if my Kubernetes cluster needs additional infrastructure from the cloud provider?

Several cloud providers enable the provisioning of any cloud resource using this same model, for example through the Azure Service Operator (ASO). Its CRDs are installed on the management cluster, which can then provision new Azure cloud resources.

Why use Cluster API to provision Azure Kubernetes Service or any other managed Kubernetes cluster?

Typically, the initial thinking is that a managed cluster shouldn’t need all of this orchestration set up. Even with a single cluster, however, it is a basic best practice to use IaC of some kind, and ideally to use the GitOps pattern to hydrate the cluster itself. It’s also rare that a single cluster is sufficient for any organization, even just to cover development, staging, and production. The “managing multiple clusters at scale” pattern above can reduce the administrative overhead for the lifecycle of managed clusters at a large scale.

Should I use Azure Service Operator or CAPZ to provision AKS?

Both are valid options to provision AKS clusters. The benefit of using CAPZ is that it includes several layers of testing to ensure that provisioning this specific type of IaC configuration for an AKS cluster will work. It also enables a consistent CAPI cluster YAML definition across cloud providers. The advantage of ASO is that it can provision AKS features the moment they become available, whereas today CAPZ needs time to add and test new AKS features so that they fit the requirements of the Cluster API framework. CAPZ does bi-weekly patch releases and major releases every two months.

Next steps

Learn more about Microsoft Azure Kubernetes Service.

Want to learn about how to utilize GitOps to hydrate clusters? Check out this tutorial on how to deploy applications with Flux v2 on AKS.

Interested in utilizing CAPI to automatically provision clusters? Try out the CAPI quickstart guide, which will provision a self-managed cluster, and also consider the CAPZ Managed Clusters page to provision an AKS cluster.

After trying out GitOps and CAPI, explore combining the two with this blog post on “How to model your Gitops Environments and Promote Releases between Them” and this sample repository using GitOps with multi-cluster and multi-tenant deployments.

To connect with the community, we welcome you to join the weekly CAPI and CAPZ community calls and engage in the #cluster-api and #cluster-api-azure channels on Kubernetes Slack.

1 Gartner, How to Scale DevOps Workflows in Multicluster Kubernetes Environments, Manjunath Bhat, Wataru Katsurashima, 2 June 2022.

GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and is used herein with permission. All rights reserved.