In this blog post, I’ll show how we packaged our Kubernetes microservices app with Helm, running on Azure Container Service, and made it easy to reproduce in various environments.

Shipping microservices as a single package

At RisingStack we use Kubernetes with tens of microservices to provide our Node.js monitoring solution Trace for our SaaS customers.

Over the last couple of months, many enterprises with strict data compliance requirements asked us to make our product available as a self-hosted service. We had to find a way for them to install Trace as a single piece of software while hiding the complexity of our infrastructure. This is challenging, because Trace consists of many small applications, databases, and settings. We wanted a solution that is not only easy to ship but also highly configurable.

Since Kubernetes is configuration based, we started looking for templating solutions, which brought up new challenges of their own. That’s how we found Helm, which provides powerful templating and package management for Kubernetes. Thanks to this process, Trace is now available as a self-hosted Node.js monitoring solution, and you can have the same experience in your own Azure cloud as our SaaS customers.

Kubernetes resource definitions

One of the best features of Kubernetes is its configuration-based nature, which lets you create or modify your resources declaratively. You can set up and manage your components, from running containers to load balancers, through YAML or JSON files.

Kubernetes makes it easy to reproduce the same setup, but it can be challenging to manage different Docker image tags, secrets, and resource limits across environments.

Take a look at the following YAML snippet, which creates three running replicas of the metrics-processor container, each with the same DB_URI environment variable:

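```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: metrics-processor
spec:
  replicas: 3
  # apps/v1 requires a selector that matches the pod template labels
  selector:
    matchLabels:
      app: metrics-processor
  template:
    metadata:
      labels:
        app: metrics-processor
    spec:
      containers:
        - name: metrics-processor
          image: myco/metrics-processor:1.7.9
          env:
            - name: DB_URI
              value: postgres://my-uri
```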

What would happen if we wanted to ship a different version of our application that connects to a separate database? How about introducing some templating?
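To make the idea concrete, here is a minimal sketch of what templating buys us. It uses Python's standard `string.Template` instead of the Go templates Helm actually uses, and the environments, image tags, and database URIs are hypothetical:

```python
from string import Template

# Trimmed-down version of the Deployment above (selector and labels omitted
# for brevity, so this is not a complete manifest) with placeholders for the
# values that differ between environments.
DEPLOYMENT_TEMPLATE = Template("""\
apiVersion: apps/v1
kind: Deployment
metadata:
  name: metrics-processor
spec:
  replicas: $replicas
  template:
    spec:
      containers:
        - name: metrics-processor
          image: myco/metrics-processor:$image_tag
          env:
            - name: DB_URI
              value: $db_uri
""")

# Hypothetical per-environment values.
ENVIRONMENTS = {
    "staging": {"replicas": 1, "image_tag": "1.8.0-rc1", "db_uri": "postgres://staging-uri"},
    "production": {"replicas": 3, "image_tag": "1.7.9", "db_uri": "postgres://my-uri"},
}


def render(env: str) -> str:
    """Render the Deployment manifest for one environment."""
    # substitute() raises KeyError when a placeholder has no value,
    # so a missing setting fails loudly instead of producing broken YAML.
    return DEPLOYMENT_TEMPLATE.substitute(ENVIRONMENTS[env])


if __name__ == "__main__":
    print(render("production"))
```

The template stays identical for every environment; only the small dictionary of values changes, which is the same split Helm makes between a chart's templates and its values.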

For a production application, you would probably store values like this in a Kubernetes Secret, whose `data` field expects Base64-encoded strings, which makes dynamic configuration even more challenging.
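As an illustration of the extra encoding step, the following sketch builds a Secret manifest as a Python dict and Base64-encodes each value before it goes into `data`. The Secret name and the `secret_manifest` helper are hypothetical:

```python
import base64
import json


def secret_manifest(name: str, data: dict[str, str]) -> dict:
    """Build a Kubernetes Secret manifest (hypothetical helper).

    The Secret's `data` field expects Base64-encoded values, so each
    plain-text value is encoded here before it goes into the manifest.
    """
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in data.items()
        },
    }


if __name__ == "__main__":
    # Hypothetical Secret holding the database URI from the example above.
    manifest = secret_manifest("metrics-processor-db", {"DB_URI": "postgres://my-uri"})
    print(json.dumps(manifest, indent=2))
```

`kubectl apply -f` accepts JSON as well as YAML, so the printed output could be applied directly. Inside a Helm chart, the Sprig template function `b64enc` performs the same encoding in the template itself.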

Templating challenges

I think we all feel that we need some kind of templating solution here, but why can it be challenging?

First of all, in Kubernetes some resources depend on each other. For example, a Deployment can use various Secrets, or we may want to run some migration jobs before we kick off our applications. This means we need a solution that can manage these dependency graphs and run our templates in the correct order.

Another challenge is managing our configurations and the different versions of our templates and variables when we update our resources. We really want to avoid having to re-create everything just to update a Docker image tag.

This is where Helm comes to the rescue.
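The dependency problem described above is, at its core, a topological sort. The sketch below uses Python's `graphlib` (3.9+) to order a hypothetical set of resources so that each one comes after the resources it depends on. Helm itself does not accept an arbitrary graph like this: it installs resources in a fixed order by kind and uses hooks for steps such as migrations, so treat this only as an illustration of the ordering problem:

```python
from graphlib import TopologicalSorter

# Hypothetical resources of one release, mapped to the resources they depend on.
DEPENDENCIES = {
    "Secret/db-credentials": set(),
    "ConfigMap/metrics-config": set(),
    "Job/db-migration": {"Secret/db-credentials"},
    "Deployment/metrics-processor": {
        "Secret/db-credentials",
        "ConfigMap/metrics-config",
        "Job/db-migration",
    },
}


def install_order(deps: dict[str, set[str]]) -> list[str]:
    """Return an order in which every resource follows its dependencies.

    TopologicalSorter raises graphlib.CycleError if the graph has a cycle,
    i.e. if no valid installation order exists.
    """
    return list(TopologicalSorter(deps).static_order())


if __name__ == "__main__":
    for resource in install_order(DEPENDENCIES):
        print(resource)
```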

Templating with Helm

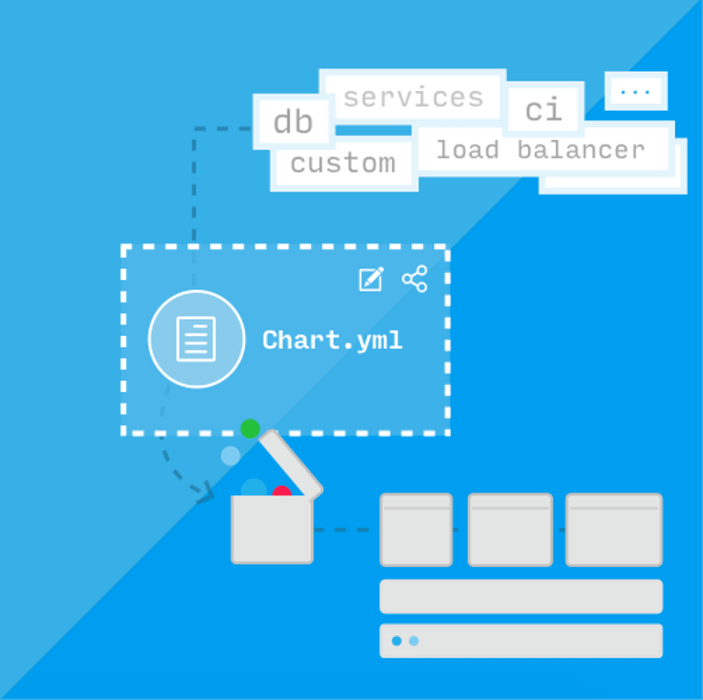

Helm is a tool for managing Kubernetes charts. Charts are packages of pre-configured Kubernetes resources.

Helm is an open source project maintained by the Kubernetes organization. It makes it easy to pack, ship and update Kubernetes resources together as a single package.
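On disk, a chart is a directory containing a `Chart.yaml` that describes the package, a `values.yaml` with default configuration, and a `templates/` directory holding the templated manifests (`helm create` generates a fuller scaffold). The sketch below writes a minimal layout of that shape; the chart name, values, and the `write_chart` helper are hypothetical:

```python
from pathlib import Path

# Hypothetical chart for the metrics-processor service. The template uses
# Helm's Go template syntax: .Values comes from values.yaml (or --set),
# .Release is filled in by Helm at install time.
CHART_FILES = {
    "Chart.yaml": """\
apiVersion: v1
name: metrics-processor
version: 0.1.0
description: Example chart for the metrics-processor service
""",
    "values.yaml": """\
replicaCount: 3
image:
  repository: myco/metrics-processor
  tag: 1.7.9
""",
    "templates/deployment.yaml": """\
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Release.Name }}-metrics-processor
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      app: {{ .Release.Name }}-metrics-processor
  template:
    metadata:
      labels:
        app: {{ .Release.Name }}-metrics-processor
    spec:
      containers:
        - name: metrics-processor
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
""",
}


def write_chart(root: Path) -> None:
    """Write the chart files above into the directory `root` (hypothetical helper)."""
    for relative_path, content in CHART_FILES.items():
        path = root / relative_path
        # templates/ has to exist before deployment.yaml can be written.
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(content)
```

For example, calling `write_chart(Path("metrics-processor"))` and then running `helm lint metrics-processor` should report whether the resulting layout is a valid chart.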

One of the best parts of Helm is its open-source chart repository maintained by the community, where you can find hundreds of pre-packaged solutions, from databases like MongoDB and Redis to applications like WordPress and OpenVPN.

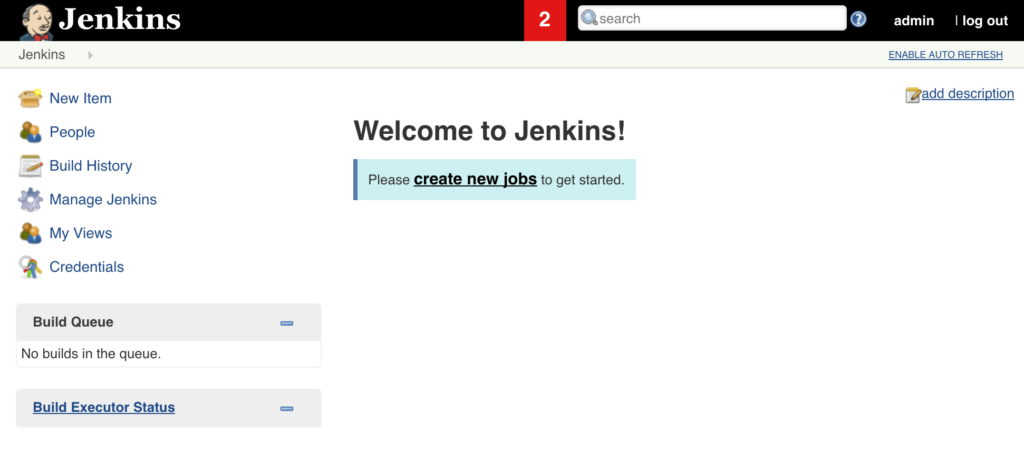

With Helm, you can install complex solutions like a Jenkins master-slave architecture in minutes.

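```shell
# Helm 2 syntax; Helm 3 takes the release name positionally: helm install my-jenkins stable/jenkins
helm install --name my-jenkins stable/jenkins
```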

Helm doesn’t just provision your Kubernetes resources in the correct order. It also comes with lifecycle hooks, advanced templating, and the concept of sub-charts. For the complete list, I recommend checking out their documentation.
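Lifecycle hooks are ordinary resources marked with the `helm.sh/hook` annotation (for example `pre-install` on a database migration Job), and the `helm.sh/hook-weight` annotation orders hooks for the same event, lowest weight first. The sketch below mimics that selection and ordering on manifests represented as Python dicts; the manifests and the `hooks_for` helper are hypothetical, and ties simply keep their input order here, which may differ from Helm's own tie-breaking:

```python
# Hypothetical manifests from a rendered chart, as parsed dicts.
MANIFESTS = [
    {
        "kind": "Job",
        "metadata": {
            "name": "db-migration",
            "annotations": {
                "helm.sh/hook": "pre-install,pre-upgrade",
                "helm.sh/hook-weight": "0",
            },
        },
    },
    {
        "kind": "Job",
        "metadata": {
            "name": "db-create",
            "annotations": {
                "helm.sh/hook": "pre-install",
                "helm.sh/hook-weight": "-5",
            },
        },
    },
    {"kind": "Deployment", "metadata": {"name": "metrics-processor"}},
]


def hooks_for(event: str, manifests: list[dict]) -> list[str]:
    """Return names of hook resources for `event`, lowest weight first."""
    selected = []
    for manifest in manifests:
        annotations = manifest["metadata"].get("annotations", {})
        # The hook annotation may list several events, separated by commas.
        events = [e.strip() for e in annotations.get("helm.sh/hook", "").split(",")]
        if event in events:
            # Weights are strings holding integers; a missing weight counts as 0.
            weight = int(annotations.get("helm.sh/hook-weight", "0"))
            selected.append((weight, manifest["metadata"]["name"]))
    # sorted() is stable, so hooks with equal weights keep their input order.
    return [name for _, name in sorted(selected, key=lambda item: item[0])]
```

With these manifests, `hooks_for("pre-install", MANIFESTS)` returns `["db-create", "db-migration"]`, while the Deployment is never treated as a hook.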

How does Helm work?

Helm works in a client-server architecture. The Tiller server runs inside the cluster, interacts with the Helm client, and interfaces with the Kubernetes API server. It is responsible for combining charts with their configuration and installing the Kubernetes resources requested by the client.

The Helm client is a command-line tool for end users, responsible for communicating with the Tiller server.

Helm example on Azure Container Service

In this example, I’ll show how you can install Jenkins in a master-slave setup on Kubernetes with Azure Container Service in minutes.

First of all, we need a running Kubernetes cluster. Luckily, Azure Container Service provides hosted Kubernetes, so I can provision one quickly:

```shell
# Provision a new Kubernetes cluster
az acs create -n myClusterName -d myDNSPrefix -g myResourceGroup --generate-ssh-keys --orchestrator-type kubernetes

# Configure kubectl with the new cluster
az acs kubernetes get-credentials --resource-group=myResourceGroup --name=myClusterName
```

If you don’t have kubectl installed, run `az acs kubernetes install-cli`.

On macOS, you can install the Helm client with Homebrew; on other platforms, check out the Helm installation docs:

```shell
brew install kubernetes-helm
```

Once the Kubernetes cluster is ready (it takes a couple of minutes), we can initialize Helm:

```shell
helm init
```

The `helm init` command installs Tiller into the current Kubernetes cluster.

Once Helm is ready to accept charts, I can install Jenkins from the official Helm repository:

```shell
helm install --name my-ci --set Master.ServiceType=NodePort,Persistence.Enabled=false stable/jenkins
```

For the sake of simplicity and security, I disabled the persistent volume and service exposure in this example.
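The `--set` flag takes comma-separated `key=value` pairs in which dots address nested values, so `Master.ServiceType=NodePort` sets `ServiceType` under `Master`. The sketch below shows that mapping with a deliberately simplified, hypothetical `parse_set` helper: it only handles dotted keys, `true`/`false`, and integers, whereas Helm's real parser also supports lists, escaping, and more:

```python
def parse_set(expr: str) -> dict:
    """Turn "a.b=x,c=true" into nested dicts (simplified, not Helm's parser)."""
    values: dict = {}
    for pair in expr.split(","):
        key, _, raw = pair.partition("=")
        *parents, leaf = key.split(".")
        node = values
        for part in parents:
            # Create intermediate levels of the nested structure as needed.
            node = node.setdefault(part, {})
        node[leaf] = _typed(raw)
    return values


def _typed(raw: str):
    """Convert booleans and integers; leave everything else as a string."""
    if raw == "true":
        return True
    if raw == "false":
        return False
    try:
        return int(raw)
    except ValueError:
        return raw


if __name__ == "__main__":
    print(parse_set("Master.ServiceType=NodePort,Persistence.Enabled=false"))
```

For the command above this yields `{"Master": {"ServiceType": "NodePort"}, "Persistence": {"Enabled": False}}`. Passing the same settings in a values file with `-f` (`--values`) is usually easier to keep under version control.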

That’s it! To visit our freshly installed Jenkins, follow the instructions in the helm install output, or use the `kubectl port-forward <pod-name> 8080` command.

In a really short amount of time, we just provisioned a Jenkins master into our cluster, which also runs its slaves in Kubernetes. It is also able to manage our other Kubernetes resources so we can immediately start to build CI pipelines.


Trace as a Helm chart

With Helm, we were able to turn our applications, configurations, autoscaling settings, and load balancers into a Helm chart that contains smaller sub-charts, and ship it as a single chart. This makes it possible to reproduce our whole infrastructure in a couple of minutes.
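In such a parent chart, values under a key named after a sub-chart are handed to that sub-chart and override its own defaults, while values under `global` are visible to every sub-chart as `.Values.global`. The sketch below approximates that scoping with a recursive merge; the chart names, values, and helper functions are hypothetical, and Helm's real value coalescing handles more edge cases (for example, explicitly set nulls):

```python
from copy import deepcopy


def deep_merge(base: dict, override: dict) -> dict:
    """Return a copy of `base` with `override` merged in; override wins."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


def subchart_values(parent: dict, subchart: str, defaults: dict) -> dict:
    """Approximate the values a sub-chart sees: defaults, parent overrides, globals."""
    values = deep_merge(defaults, parent.get(subchart, {}))
    if "global" in parent:
        # Parent globals are merged into the sub-chart's own `global` section.
        values["global"] = deep_merge(values.get("global", {}), parent["global"])
    return values


# Hypothetical parent chart values and sub-chart defaults.
PARENT_VALUES = {
    "global": {"imageRegistry": "myco"},
    "metrics-processor": {"replicas": 3, "image": {"tag": "1.7.9"}},
}
METRICS_DEFAULTS = {"replicas": 1, "image": {"repository": "metrics-processor", "tag": "latest"}}
```

Merging recursively rather than replacing whole sections is what lets the parent override only `image.tag` while the sub-chart keeps its own `image.repository` default.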

We’re not only using this to ship the self-hosted version of Trace, but we can also easily run multiple test environments or even move/copy our entire SaaS infrastructure between multiple cloud providers. We only need a running Kubernetes cluster.

Keeping Helm charts in sync

To keep our charts in sync with our infrastructure, we changed our release process so that it updates our Helm repository and the chart’s Docker image tag. For this, we created a small service that uses the GitHub API and is triggered by our CI.
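The core of such an update can be small. The sketch below is not our service; it leaves out the GitHub API calls entirely and uses hypothetical `set_image_tag` and `bump_chart_version` helpers. It assumes `values.yaml` contains exactly one `tag:` key and `Chart.yaml` a plain `version: X.Y.Z` line, and rewrites the file contents with regular expressions (parsing the YAML properly would be more robust):

```python
import re

# Assumption: exactly one `tag:` key in values.yaml, one `version:` line in Chart.yaml.
TAG_LINE = re.compile(r"^([ \t]*tag:[ \t]*).*$", re.MULTILINE)
VERSION_LINE = re.compile(r"^version:[ \t]*(\d+)\.(\d+)\.(\d+)[ \t]*$", re.MULTILINE)


def set_image_tag(values_yaml: str, new_tag: str) -> str:
    """Replace the value of the single `tag:` key in values.yaml."""
    updated, count = TAG_LINE.subn(lambda m: m.group(1) + new_tag, values_yaml)
    if count != 1:
        raise ValueError(f"expected exactly one tag: line, found {count}")
    return updated


def bump_chart_version(chart_yaml: str) -> str:
    """Increment the patch part of the chart's SemVer `version:` line."""
    def bump(match: re.Match) -> str:
        major, minor, patch = (int(g) for g in match.groups())
        return f"version: {major}.{minor}.{patch + 1}"

    updated, count = VERSION_LINE.subn(bump, chart_yaml)
    if count != 1:
        raise ValueError(f"expected exactly one version: line, found {count}")
    return updated
```

Bumping the chart version along with the image tag gives every release a distinct chart version in the repository.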

Outro

Kubernetes is rapidly gaining popularity, and cloud providers like Azure now offer hosted clusters. With Helm, you can ship and install complex microservices applications or databases into your Kubernetes cluster.

It has never been easier to try out new technologies and ship awesome features.

Questions? Let me know in the comments.