One of the exciting features of 4th Gen Intel® Xeon® processors is Intel® Advanced Matrix Extensions (Intel® AMX), an x86 extension that accelerates the matrix multiplications common in deep learning (DL) workloads. To take advantage of it in ONNX Runtime, Intel and Microsoft developed an 8-bit integer matrix multiplication kernel that uses Intel® AMX instructions. The kernel runs more than four times faster than on 3rd Gen Intel® Xeon® processors with Intel® DL Boost. This blog explores how ONNX Runtime harnesses Intel® AMX to accelerate performance on 4th Gen Intel® Xeon® processors.

Intel AMX

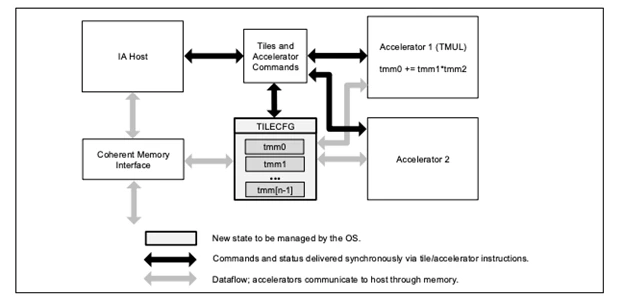

Intel AMX is an x86 extension that operates on matrices. As Figure 1 shows, it consists of two key components: a set of two-dimensional register files, called tiles, that represent sub-arrays of a larger 2D memory image, and an accelerator, the tile matrix multiply unit (TMUL), that operates on these tiles. Intel AMX instructions are synchronous with the central processing unit (CPU) instruction stream, and tile load/store operations are coherent with CPU memory operations. Intel AMX instructions can be interleaved with other x86 instructions and can run in parallel with other extensions such as Intel® AVX-512. For more details on the Intel AMX architecture, refer to the Intel® 64 and IA-32 Architectures Optimization Reference Manual.

Figure 1. Intel AMX Architecture.
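Before any tile instruction executes, software tells the CPU the shape of each tile by loading a 64-byte tile configuration. The struct below sketches that layout for palette 1, which provides eight tiles (TMM0 to TMM7) of up to 16 rows by 64 bytes each. The struct and helper names are hypothetical, and the code only builds the configuration in memory; it does not execute any AMX instruction.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// Hypothetical name for the 64-byte memory operand that LDTILECFG reads
// (palette 1). Slots 0-7 map to TMM0-TMM7; unused slots must stay zero.
struct TileConfig {
    std::uint8_t palette_id;    // 1 = the AMX palette with eight tiles
    std::uint8_t start_row;     // restart row for interrupted tile loads/stores; 0 normally
    std::uint8_t reserved[14];  // must be zero
    std::uint16_t colsb[16];    // bytes per row for each tile (at most 64)
    std::uint8_t rows[16];      // number of rows for each tile (at most 16)
};
static_assert(sizeof(TileConfig) == 64, "LDTILECFG reads a 64-byte operand");

constexpr int kNumTiles = 8;      // TMM0-TMM7
constexpr int kMaxRows = 16;      // palette 1 limit
constexpr int kMaxRowBytes = 64;  // palette 1 limit: 16 x 64 bytes = 1 KiB per tile

// Sets the shape of one tile; returns false if the shape exceeds palette 1 limits.
bool configure_tile(TileConfig& cfg, int tile, int rows, int row_bytes) {
    if (tile < 0 || tile >= kNumTiles || rows < 1 || rows > kMaxRows ||
        row_bytes < 1 || row_bytes > kMaxRowBytes) {
        return false;
    }
    cfg.rows[tile] = static_cast<std::uint8_t>(rows);
    cfg.colsb[tile] = static_cast<std::uint16_t>(row_bytes);
    return true;
}

// Zeroes the config and gives all eight tiles the maximum 16 x 64-byte shape.
TileConfig make_max_config() {
    TileConfig cfg;
    std::memset(&cfg, 0, sizeof(cfg));
    cfg.palette_id = 1;
    for (int t = 0; t < kNumTiles; ++t) {
        const bool ok = configure_tile(cfg, t, kMaxRows, kMaxRowBytes);
        assert(ok);
        (void)ok;  // avoid an unused-variable warning when NDEBUG is defined
    }
    return cfg;
}
```

On AMX hardware, such a struct would be passed to the `_tile_loadconfig` intrinsic from `<immintrin.h>` (GCC/Clang with `-mamx-tile`) before any tile loads. On Linux, the process must first request tile-data permission through `arch_prctl` with `ARCH_REQ_XCOMP_PERM`.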

TMUL comprises a grid of fused multiply-add (FMA) units that operate on Intel AMX tiles. As shown in Figure 2, the TMUL matrix multiplication instruction computes C[M][N] += A[M][K] * B[K][N], subject to these tile shape limits:

- The A tile can have 1 to 16 rows and 1 to MAX_TILE_K columns.

- The B tile can have 1 to MAX_TILE_K rows and 1 to 16 columns.

- The C tile can have 1 to 16 rows and 1 to 16 columns.

Here, MAX_TILE_K = 64/sizeof(type_t), where type_t is the type of the data being operated on. So MAX_TILE_K = 64 for (u)int8 data and MAX_TILE_K = 32 for bfloat16 data.
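The shape limits above can be checked at compile time. This is a minimal sketch with hypothetical helper names; it assumes a 2-byte `bf16` stand-in because standard C++17 has no bfloat16 type, and it models the logical A, B, and C shapes rather than the packed layout that B actually uses inside a tile register.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical 2-byte stand-in for bfloat16; only its size matters here.
struct bf16 { std::uint16_t bits; };

constexpr std::size_t kTileRowBytes = 64;  // each tile row holds at most 64 bytes
constexpr std::size_t kMaxTileM = 16;      // A and C tiles: at most 16 rows
constexpr std::size_t kMaxTileN = 16;      // B and C tiles: at most 16 columns

// MAX_TILE_K = 64 / sizeof(type_t)
template <typename T>
constexpr std::size_t max_tile_k() { return kTileRowBytes / sizeof(T); }

static_assert(max_tile_k<std::uint8_t>() == 64, "u8: 64 elements per row");
static_assert(max_tile_k<std::int8_t>() == 64, "s8: 64 elements per row");
static_assert(max_tile_k<bf16>() == 32, "bf16: 32 elements per row");

// True if A[M][K] * B[K][N] -> C[M][N] fits in single tiles for element type T.
template <typename T>
constexpr bool fits_in_tiles(std::size_t m, std::size_t k, std::size_t n) {
    return m >= 1 && m <= kMaxTileM &&
           k >= 1 && k <= max_tile_k<T>() &&
           n >= 1 && n <= kMaxTileN;
}
```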

The data type of the output tile depends on the data type of the input tiles.

- A tiles and B tiles contain data of type type_t, i.e., (u)int8 or bfloat16.

- C tiles contain data of type res_type_t:
  - int32 if type_t = (u)int8
  - float if type_t = bfloat16

![This figure explains Intel AMX matrix multiplication with max-sized int8 tiles. The matrix multiplication operation in the TMUL instruction computes C[M][N] += A[M][K] * B[K][N]](https://cloudblogs.microsoft.com/opensource/wp-content/uploads/sites/37/2023/09/Picture2.webp)

Figure 2. Intel AMX matrix multiplication with max-sized int8 tiles.
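To make the int8 case concrete, here is a plain C++ reference modeled on the TDPBUSD operation (unsigned 8-bit A, signed 8-bit B, int32 C). It is an illustrative scalar model with a hypothetical function name, not how TMUL is implemented, and it stores B in logical row-major K x N order rather than the packed layout the hardware expects.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Scalar model of C[M][N] += A[M][K] * B[K][N] for u8 x s8 -> int32.
// All matrices are row-major; limits follow max-sized int8 tiles.
void tile_dpbusd_reference(std::vector<std::int32_t>& c,       // M x N accumulators
                           const std::vector<std::uint8_t>& a,  // M x K, unsigned
                           const std::vector<std::int8_t>& b,   // K x N, signed
                           std::size_t m, std::size_t k, std::size_t n) {
    assert(m >= 1 && m <= 16 && n >= 1 && n <= 16 && k >= 1 && k <= 64);
    assert(a.size() == m * k && b.size() == k * n && c.size() == m * n);
    for (std::size_t i = 0; i < m; ++i) {
        for (std::size_t j = 0; j < n; ++j) {
            std::int32_t acc = c[i * n + j];  // accumulate into the existing C value
            for (std::size_t p = 0; p < k; ++p) {
                acc += static_cast<std::int32_t>(a[i * k + p]) *
                       static_cast<std::int32_t>(b[p * n + j]);
            }
            c[i * n + j] = acc;
        }
    }
}
```

Widening each product to int32 before summing is what lets a full 64-element row of 8-bit values accumulate without overflow.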

ONNX Runtime and Intel AMX

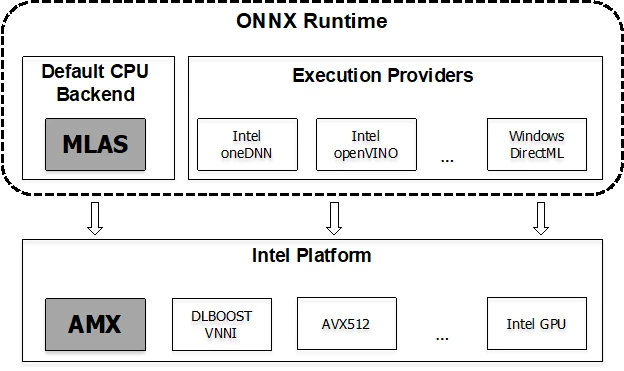

ONNX Runtime is an open-source, high-performance machine learning engine. It integrates a range of hardware acceleration techniques designed to enhance performance across diverse hardware platforms. Intel and Microsoft collaborate on an ongoing basis to ensure accelerated performance across the Intel hardware ecosystem. Previously, Intel and Microsoft developed 8-bit integer matrix multiplication and convolution kernels using Intel® DL Boost instructions, which were introduced with 2nd Gen Intel® Xeon® processors. For the latest Intel hardware generation, Intel partnered with Microsoft to add Intel AMX instructions for 8-bit integer matrix multiplications to the Microsoft Linear Algebra Subroutine (MLAS) library, which the default CPU execution provider in ONNX Runtime uses. Figure 3 gives a high-level view of the execution providers in ONNX Runtime and the Intel technologies they are enabled for.

The steps to build ONNX Runtime with the CPU execution provider can be found in the ONNX Runtime documentation. To also build the micro benchmarks, add `--build_micro_benchmarks` to the build command. To run the QGEMM micro benchmarks, use `onnxruntime_mlas_benchmark.exe --benchmark_filter=QGEMM*`.

Figure 3. ONNX Runtime architecture.

Code Listing 1 is a snippet of the ONNX Runtime matrix multiplication kernel optimized with Intel AMX instructions. Here, tile_loadd loads data into a TMM tile register, and tile_dpbusd multiplies the unsigned 8-bit values in one tile by the signed 8-bit values in another and accumulates the results as 32-bit integers in a third tile. The kernel is written using both intrinsics and assembly code. For more details, see qgemm_kernel_amx.cpp, amx_common.h, and QgemmU8S8KernelAmxCommon.S in the ONNX Runtime source.

Code Listing 1. ONNX Runtime matrix multiplication using Intel AMX instructions.

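```cpp
// a_blk walks the first block of TILE_M rows of packed A; a_next_blk walks
// the second block, which starts PackedCountK * TILE_M elements later.
const MLAS_GEMM_U8S8_KERNEL_AMX::PackedAType* a_blk = A;
const MLAS_GEMM_U8S8_KERNEL_AMX::PackedAType* a_next_blk = A + PackedCountK * TILE_M;

// Each iteration consumes one TILE_K-wide slice of the packed K dimension.
for (size_t k = PackedCountK; k > 0; k -= TILE_K) {
    // Load two adjacent B tiles (TMM0, TMM1) and the first A tile (TMM2).
    // The last tile_loadd argument is the row stride of the source data.
    tile_loadd(TMM0, b_blk, TILE_K);
    tile_loadd(TMM2, a_blk, static_cast<int>(PackedCountK));
    tile_loadd(TMM1, (void*)(b_blk + PackedCountK * TILE_N), TILE_K);

    // u8 x s8 dot products, accumulated as int32 in TMM4 and TMM6.
    tile_dpbusd(TMM4, TMM2, TMM0);
    tile_dpbusd(TMM6, TMM2, TMM1);

    // When a second block of A rows exists, reuse both loaded B tiles for it.
    if (m1 > 0) {
        tile_loadd(TMM3, a_next_blk, static_cast<int>(PackedCountK));
        tile_dpbusd(TMM5, TMM3, TMM0);
        tile_dpbusd(TMM7, TMM3, TMM1);
    }

    // Advance B and both A pointers to the next K slice.
    b_blk += TILE_N * TILE_K;
    a_blk += TILE_K;
    a_next_blk += TILE_K;
}
```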
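The register blocking in Code Listing 1 can be modeled in scalar C++: for each K slice it loads two B tiles and two A tiles, then issues four multiply-accumulates, so every loaded tile is used twice. The sketch below assumes max-sized int8 tiles (TILE_M = TILE_N = 16, TILE_K = 64), both A row blocks present (the m1 > 0 path), K a multiple of TILE_K, and a plain row-major B. The functions load_a, load_b, and dpbusd are hypothetical stand-ins for tile_loadd and tile_dpbusd, not ONNX Runtime code.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr std::size_t kTileM = 16, kTileN = 16, kTileK = 64;  // assumed tile sizes

using ATile = std::array<std::uint8_t, kTileM * kTileK>;  // 16 x 64 u8
using BTile = std::array<std::int8_t, kTileK * kTileN>;   // 64 x 16 s8 (logical layout)
using CTile = std::array<std::int32_t, kTileM * kTileN>;  // 16 x 16 int32

// Stand-in for tile_loadd on A: copy a kTileM x kTileK slice of row-major A.
ATile load_a(const std::vector<std::uint8_t>& a, std::size_t lda,
             std::size_t row0, std::size_t k0) {
    ATile t{};
    for (std::size_t i = 0; i < kTileM; ++i)
        for (std::size_t p = 0; p < kTileK; ++p)
            t[i * kTileK + p] = a[(row0 + i) * lda + k0 + p];
    return t;
}

// Stand-in for tile_loadd on B: copy a kTileK x kTileN slice of row-major B.
BTile load_b(const std::vector<std::int8_t>& b, std::size_t ldb,
             std::size_t k0, std::size_t col0) {
    BTile t{};
    for (std::size_t p = 0; p < kTileK; ++p)
        for (std::size_t j = 0; j < kTileN; ++j)
            t[p * kTileN + j] = b[(k0 + p) * ldb + col0 + j];
    return t;
}

// Stand-in for tile_dpbusd: c += a * b with u8 x s8 products summed in int32.
void dpbusd(CTile& c, const ATile& a, const BTile& b) {
    for (std::size_t i = 0; i < kTileM; ++i)
        for (std::size_t j = 0; j < kTileN; ++j) {
            std::int32_t acc = c[i * kTileN + j];
            for (std::size_t p = 0; p < kTileK; ++p)
                acc += static_cast<std::int32_t>(a[i * kTileK + p]) *
                       static_cast<std::int32_t>(b[p * kTileN + j]);
            c[i * kTileN + j] = acc;
        }
}

// Computes a (2 * kTileM) x (2 * kTileN) block of C with the listing's 2x2 blocking.
std::vector<std::int32_t> gemm_2x2(const std::vector<std::uint8_t>& a,
                                   const std::vector<std::int8_t>& b, std::size_t k) {
    const std::size_t m = 2 * kTileM, n = 2 * kTileN;
    assert(k % kTileK == 0 && a.size() == m * k && b.size() == k * n);
    CTile c4{}, c6{}, c5{}, c7{};  // accumulators named after TMM4..TMM7
    for (std::size_t k0 = 0; k0 < k; k0 += kTileK) {
        const BTile b0 = load_b(b, n, k0, 0);       // TMM0
        const ATile a0 = load_a(a, k, 0, k0);       // TMM2
        const BTile b1 = load_b(b, n, k0, kTileN);  // TMM1
        dpbusd(c4, a0, b0);
        dpbusd(c6, a0, b1);
        const ATile a1 = load_a(a, k, kTileM, k0);  // TMM3
        dpbusd(c5, a1, b0);  // b0 and b1 are reused, not reloaded
        dpbusd(c7, a1, b1);
    }
    // Scatter the four accumulator tiles into a row-major m x n result.
    std::vector<std::int32_t> c(m * n);
    const CTile* tiles[2][2] = {{&c4, &c6}, {&c5, &c7}};
    for (std::size_t rb = 0; rb < 2; ++rb)
        for (std::size_t cb = 0; cb < 2; ++cb)
            for (std::size_t i = 0; i < kTileM; ++i)
                for (std::size_t j = 0; j < kTileN; ++j)
                    c[(rb * kTileM + i) * n + cb * kTileN + j] =
                        (*tiles[rb][cb])[i * kTileN + j];
    return c;
}
```

The design choice this illustrates: four loads feed four multiply-accumulates per K slice, whereas computing each C tile independently would need eight loads for the same work.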

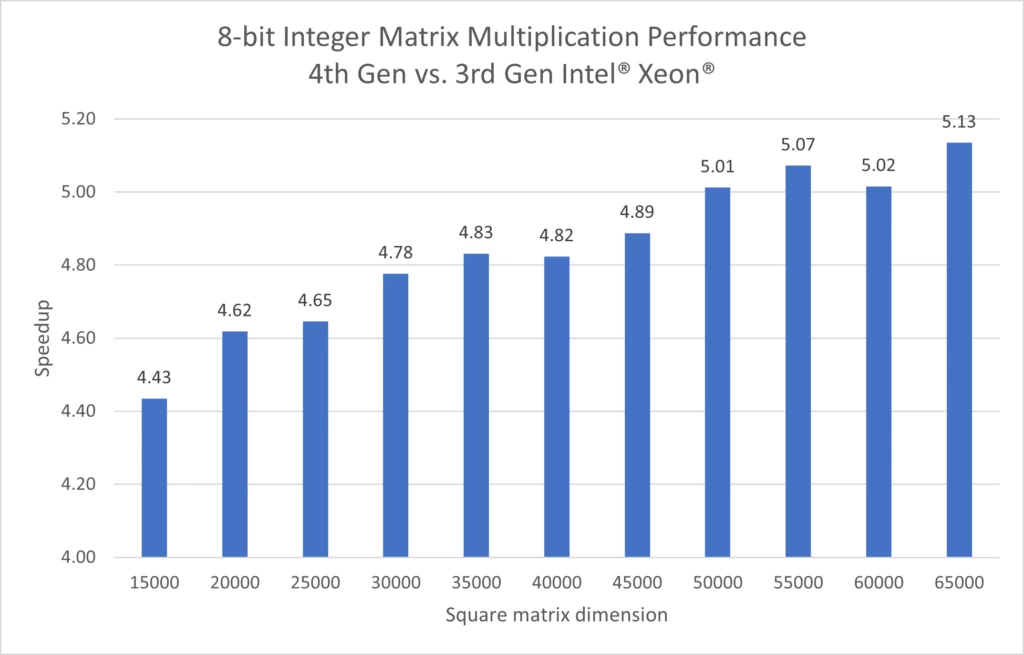

Powered by Intel AMX instructions, 8-bit integer matrix multiplication in ONNX Runtime on 4th Gen Intel Xeon processors delivered more than four times the performance of 3rd Gen Intel Xeon processors, as shown in Figure 4. To realize these gains in a model that contains matrix multiplications, first quantize the model with ONNX Runtime quantization, then run it with the ONNX Runtime CPU package on a 4th Gen Intel Xeon processor.

Figure 4. ONNX Runtime 8-bit integer matrix multiplication performance benefit of 4th Gen over 3rd Gen Intel Xeon processors across square matrix shapes.
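The sketch below is not ONNX Runtime's quantization tooling; it only illustrates the arithmetic of the ONNX QuantizeLinear operator for a uint8 activation with a zero point and an int8 weight with zero point 0 (the u8/s8 pairing used by the U8S8 kernel in Code Listing 1), and how an int32 dot product is mapped back to float. The function names, scales, and zero point are illustrative assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// ONNX QuantizeLinear to uint8: q = saturate(round(x / scale) + zero_point).
// std::nearbyint rounds half to even under the default FE_TONEAREST mode,
// matching the rounding the ONNX operator specifies.
std::uint8_t quantize_u8(float x, float scale, std::uint8_t zero_point) {
    const float q = std::nearbyint(x / scale) + static_cast<float>(zero_point);
    return static_cast<std::uint8_t>(std::min(std::max(q, 0.0f), 255.0f));
}

// Same formula to int8 with zero_point = 0 (symmetric), saturating to [-128, 127].
std::int8_t quantize_s8(float x, float scale) {
    const float q = std::nearbyint(x / scale);
    return static_cast<std::int8_t>(std::min(std::max(q, -128.0f), 127.0f));
}

// u8 x s8 dot product accumulated in int32 (the arithmetic TMUL performs),
// then dequantized: sum_p (qa[p] - za) * qb[p] * sa * sb.
float quantized_dot(const std::vector<std::uint8_t>& qa, std::uint8_t za, float sa,
                    const std::vector<std::int8_t>& qb, float sb) {
    assert(qa.size() == qb.size());
    std::int32_t acc = 0;    // sum of qa[p] * qb[p]
    std::int32_t sum_b = 0;  // sum of qb[p], used to remove the zero-point term
    for (std::size_t p = 0; p < qa.size(); ++p) {
        acc += static_cast<std::int32_t>(qa[p]) * static_cast<std::int32_t>(qb[p]);
        sum_b += qb[p];
    }
    // sum (qa - za) * qb == sum qa * qb - za * sum qb
    const std::int32_t centered = acc - static_cast<std::int32_t>(za) * sum_b;
    return static_cast<float>(centered) * sa * sb;
}
```

With scales of 1/32 and a zero point of 128, the vectors {0.5, 1.0, -0.25, 2.0} and {1.0, 0.5, 2.0, 0.25} quantize exactly, so the dequantized dot product equals the float result of 1.0; in general, quantization introduces rounding error.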

Looking ahead

ONNX Runtime achieves higher performance on 4th Gen Intel Xeon processors by using Intel AMX to implement 8-bit integer matrix multiplications. DL models that contain many matrix multiplications can benefit from running on ONNX Runtime with these Intel AMX optimizations. Intel and Microsoft will continue to develop ONNX Runtime for new DL hardware features.

We invite you to try ONNX Runtime to accelerate your models on 4th Gen Intel Xeon processors. We look forward to your feedback and feature requests; please submit them through our GitHub projects.

Configurations

3rd Gen Intel Xeon: Intel Xeon Platinum 8380, 40 cores, HT On, Turbo On, Total Memory 1024GB (16x64GB DDR4 3200 MT/s [3200 MT/s]), Ubuntu 22.04 LTS, 5.15.0-57-generic, GCC 11.3.0, ONNX Runtime v1.14.1

4th Gen Intel Xeon: Intel Xeon Platinum 8480+, 56 cores, HT On, Turbo On, Total Memory 1024GB (16x64GB DDR5 4800 MT/s [4800 MT/s]), Ubuntu 22.04 LTS, 5.15.0-57-generic, GCC 11.3.0, ONNX Runtime v1.14.1