Building digital trust in Microsoft Copilot for Dynamics 365 and Power Platform

At Microsoft, trust is the foundation of everything we do. As more organizations adopt Copilot in Dynamics 365 and Power Platform, we are committed to helping everyone use AI responsibly. We do this by ensuring our AI products deliver the highest levels of security, compliance, and privacy in accordance with our Responsible AI Standard—our framework for the safe deployment of AI technologies.

Take a moment to review the latest steps we are taking to help your organization securely deploy Copilot guided by our principles of safety, security, and trust.

Copilot architecture and responsible AI principles in action

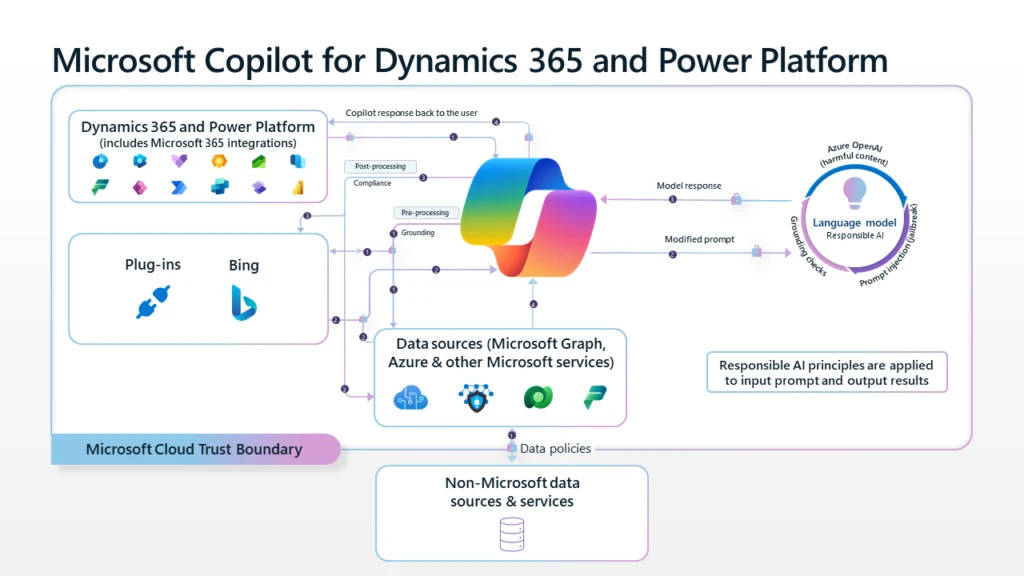

Let’s start with an overview of how Copilot works, how it keeps your business data secure and adheres to privacy requirements, and how it uses generative AI responsibly.

First, Copilot receives a prompt from a user within Dynamics 365 or Power Platform. This prompt could be in the form of a question that the user types into a chat pane, or an action, such as selecting a button labeled “Create an email.”

Copilot processes the prompt using an approach called grounding, which might include retrieving data from Microsoft Dataverse, Microsoft Graph, or external sources. Grounding adds relevant context to the prompt, so the user gets responses that are more appropriate to their task. Interactions with Copilot are specific to each user: Copilot can access only the data that the current user has permission to access.
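To make the idea of user-specific grounding concrete, here is a minimal sketch in Python. It is an illustration only, not how Copilot is implemented: the `Record` type, the `allowed_users` field, and the `ground_prompt` function are hypothetical names, and real permissions in Dataverse or Microsoft Graph are far richer than a set of user IDs.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """Hypothetical business record with a simple access list."""
    record_id: str
    text: str
    allowed_users: frozenset


def ground_prompt(user_id: str, prompt: str, records: list[Record]) -> str:
    """Attach only the records the current user may read to the prompt."""
    visible = [r for r in records if user_id in r.allowed_users]
    context = "\n".join(f"- {r.text}" for r in visible)
    return f"Context:\n{context}\n\nUser request: {prompt}"


# Sample data (invented for this sketch).
RECORDS = [
    Record("acc-1", "Contoso renewal due in March", frozenset({"alice", "bob"})),
    Record("acc-2", "Fabrikam contract under legal review", frozenset({"bob"})),
]

grounded = ground_prompt("alice", "Summarize my open accounts", RECORDS)
print(grounded)
```

In this sketch, the record that only `bob` can read never reaches the prompt built for `alice`, so the model cannot surface it in her response.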

Copilot uses Azure OpenAI Service to access powerful generative AI models that understand natural language inputs, and it returns a response to the user in the appropriate form. For example, a response might be in the form of a chat message, an email, or a chart. Users should always review the response before taking any action.

How Copilot uses your proprietary business data

Responses are grounded in your business content and business data. Copilot has real-time access to both your content and context to generate answers that are precise, relevant, and anchored in your business data for accuracy and specificity. This real-time access goes through our Dataverse platform (which includes all Power Platform connectors), honoring the data loss prevention and other security policies put in place by your organization. We follow the pattern of Retrieval-Augmented Generation (RAG), which augments the capabilities of language models by adding dynamic grounding data to the prompt that we send to the model. Our system dynamically looks up the relevant data schema using our own embedding indexes and then uses the language models to help translate the user’s question into a query that we can run against the system of record.
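The retrieval step described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the production design: the table descriptions in `SCHEMA_DOCS` are invented, `embed` is a toy bag-of-words stand-in for a learned embedding model, and the final call to a language model is left out, so the sketch stops once the augmented prompt is built.

```python
import math
import re
from collections import Counter

# Hypothetical schema descriptions; real Dataverse tables and columns differ.
SCHEMA_DOCS = {
    "account": "account table: name, revenue, industry, owner",
    "opportunity": "opportunity table: topic, estimated revenue, close date, account",
    "case": "case table: title, priority, status, customer",
}


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


# The "embedding index": one vector per schema description.
INDEX = {name: embed(doc) for name, doc in SCHEMA_DOCS.items()}


def retrieve_schema(question: str, k: int = 1) -> list:
    """Return the k table names whose descriptions best match the question."""
    q = embed(question)
    ranked = sorted(INDEX, key=lambda name: cosine(q, INDEX[name]), reverse=True)
    return ranked[:k]


def build_augmented_prompt(question: str) -> str:
    """Add the retrieved schema to the prompt that would be sent to the model."""
    schema = "\n".join(SCHEMA_DOCS[t] for t in retrieve_schema(question))
    return (
        f"Schema:\n{schema}\n\n"
        f"Translate this question into a query over the schema above:\n{question}"
    )


question = "Which opportunity has the latest close date?"
print(build_augmented_prompt(question))
```

The design point is that the model sees only the slice of schema relevant to the question, and its output is a query to run against the system of record rather than an answer produced from memory.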

We do not use your data to train language models. We believe that our customers’ data is their data in accordance with Microsoft’s data privacy policy. AI-powered language models are trained on a large but limited corpus of data—but prompts, responses, and data accessed through Microsoft Graph and Microsoft services are not used to train Copilot for Dynamics 365 or Power Platform capabilities for use by other customers. Furthermore, the models are not improved through your usage. This means that your data is accessible only by authorized users within your organization unless you explicitly consent to other access or use.

How Copilot protects business information and data

Enterprise-grade AI, powered by Azure OpenAI Service. Copilot is powered by the trusted and compliant Azure OpenAI Service, which provides robust, enterprise-grade security features. These features include content filtering to identify and block output of harmful content and protect against prompt injections (jailbreak attacks), which are user prompts that provoke the generative AI model into behaving in ways it was trained not to. Azure AI services are designed to enhance data governance and privacy and adhere to Microsoft’s strict data protection and privacy standards. Azure OpenAI also supports enterprise features like Azure Policy and AI-based security recommendations by Microsoft Defender for Cloud, meeting compliance requirements with customer-managed data encryption keys and robust governance features.

Built on Microsoft’s comprehensive approach to security, privacy, and compliance. Copilot is integrated into Microsoft Dynamics 365 and Power Platform. It automatically inherits all your company’s valuable security, compliance, and privacy policies and processes. Copilot is hosted within Microsoft Cloud Trust Boundary and adheres to comprehensive, industry-leading compliance, security, and privacy practices. Our handling of Copilot data mirrors our treatment of other customer data, giving you complete autonomy in deciding whether to retain data and determining the specific data elements you wish to keep.

Safeguarded by multiple forms of protection. Customer data is protected by several technologies and processes, including various forms of encryption. Service-side technologies encrypt organizational content at rest and in transit for robust security. Connections are safeguarded with Transport Layer Security (TLS), and data transfers between Dynamics 365, Power Platform, and Azure OpenAI occur over the Microsoft backbone network, ensuring both reliability and safety. Copilot uses industry-standard secure transport protocols when data moves over a network—between user devices and Microsoft datacenters or within the datacenters themselves.

Watch this presentation by James Oleinik for a closer look at how Copilot allows users to securely interact with business data within their context, helping to ensure data remains protected inside the Microsoft Cloud Trust Boundary. You’ll also learn about measures we take to ensure that Copilot is safe for your employees and your data, such as how Copilot isolates business data from the language model so as not to retrain the AI model.

Architected to protect tenant, group, and individual data. We know that data leakage is a concern for customers. Microsoft AI models are not trained on and don’t learn from your tenant data or your prompts unless your tenant admin has opted in to sharing data with us. Within your environment, you can control access through permissions that you set up. Authentication and authorization mechanisms segregate requests to the shared model among tenants. Copilot utilizes data that only you can access. Your data is not available to others.
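As a rough illustration of tenant segregation, the Python sketch below scopes every data lookup to the tenant in the caller's authenticated context. All names here (`AuthContext`, `TENANT_DATA`, `fetch_grounding_data`) are hypothetical, and the sketch assumes authentication has already happened upstream; it shows the general pattern, not Microsoft's implementation.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class AuthContext:
    """Hypothetical identity established by an upstream authentication step."""
    tenant_id: str
    user_id: str


# Invented sample data, partitioned by tenant.
TENANT_DATA = {
    "tenant-a": ["Contoso pipeline report"],
    "tenant-b": ["Fabrikam pricing sheet"],
}


def fetch_grounding_data(auth: AuthContext, requested_tenant: str) -> list[str]:
    """Return grounding data only when the request targets the caller's tenant."""
    if requested_tenant != auth.tenant_id:
        raise PermissionError("cross-tenant access denied")
    return list(TENANT_DATA.get(auth.tenant_id, []))


alice = AuthContext(tenant_id="tenant-a", user_id="alice")
print(fetch_grounding_data(alice, "tenant-a"))
```

Even though both tenants share the same function (and, by analogy, the same model), a request authenticated for `tenant-a` has no path to data stored under `tenant-b`.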

Committed to building AI responsibly

As your organization explores Copilot for Dynamics 365 and Power Platform, we are committed to delivering the highest levels of security, privacy, compliance, and regulatory commitments, helping you transform into an AI-powered business with confidence.